Ch 9. Ascendancy

What follows is an analysis of, and response to, the AI takeover mythos. It begins with a discussion of the origins of the myth and then deconstructs it through a detailed technical analysis. The primary concern with this scenario is not that it could come to pass, but that it detracts from the seriousness of the actual threats. The analysis matters because this myth is the primary motivation behind the desire for control over advanced artificial intelligence. A research direction and public policy built on that desire must be countered immediately, as they leave the most important challenges unaddressed. Thus, the goal of this chapter is to dismantle this myth so that the economic, social, and force multiplication challenges can be brought to the forefront.

9.1 Mythos

There are two types of ascendancy under consideration here. The first is over all life and the environment. The second is the ascendancy of human beings over humanity itself.

Ascendancy over humanity has major social, political, and economic dimensions. It is more than just powerful people or the control of resources; it rests on a pervasive ensemble of information and processes that are used to perpetuate and maintain it. In the end, ascendancy is always powered by individuals, as these systems would collapse if individuals did not uphold them.

It is important to reinforce the notion that these systems are never to be taken as living things that exist independently of the causal efficacy of individual thought and action. While some ideas are worse than others, it is always the way in which people think and act that determines the outcome of human power structures.

Two threats have primacy with respect to human ascendancy of both kinds: force and subversion. Classically, humanity has dealt with and mastered the use of force, reaching a level so advanced that it now possesses the ability to eliminate all terrestrial life. Now subversion has taken hold as a central force. Its conflicts focus on the information and processes that drive individuals to act, and those actions in turn decide how human aggregates evolve, from the smallest groups to entire cultures.

A common myth, if not the myth, of this field of inquiry is that advanced artificial intelligence, intentionally or unintentionally, will rise up and overthrow humanity. The fear is that we will suffer a loss of ascendancy of the first kind and be left to the whims of a vastly superior race of artificial beings.

Only, that is not the story of things to come. It is a fiction. Such a scenario cannot possibly happen by accident. One will not merely stumble upon it. It is not the default trajectory. In fact, there is no trajectory in strong artificial intelligence; it is the formalization of a subject that samples from an infinite palette of experiences and values. The effort required to connect that subject to the real world is difficult and obscure, and the ways in which it must be constructed to produce coherent and practical effects in the world are exceedingly complex. The sheer number of factors, both internal and external, that would need to come together to give rise to a loss of ascendancy of the first kind is so vast that the odds against it are astronomical. It is so unlikely, in fact, that it is barely worth discussing, and it would not have been part of this book were it not for the unfortunate fact that the mythology has been catapulted into the mainstream.

What is most likely to occur is a change in the ascendancy of the second kind, which is a subversion of humanity from within. Quite simply, economic and social systems will radically shift in response to automation. This represents a threat primarily to those who have ascendancy over humanity now, as they will no longer be within reach of the levers of power. Discussing that threat, however, is not the focus of this chapter.

To be clear, the loss of the second kind of ascendancy does not imply a usurpation of human rule by an outsider, but a change or threat to the status quo as perceived by those who rule now and by the constituents who would see change as overwhelmingly negative.

Perhaps they want things to stay the same, or they cannot imagine life without control over others. Either way, with the loss of the second kind of ascendancy, humans are still in control of their own affairs. The difference will be that the information and processes, along with the people who uphold them, will have changed so dramatically and irreversibly that it could be interpreted as the end of their “world”. Fearing and anticipating a future without themselves in control is motivation enough to cast out anything and anyone who could bring about such change.

On the other hand, the actual motivations could be altruistic. Regardless of intent, what matters is that both the myth and the desire for control are ineffective in a global context. Control cannot be obtained, and the ascendancy scare is insignificant compared to the immediate and global threats from force-multiplied aggression and negligence.

9.2 Interpretations

The most popular modern AI ascendancy narrative, as of the writing of this book, is based on the idea of the uncontrollable or unpredictable growth of technology. One of the earliest to discuss this, within the explicit context of artificial intelligence, was I. J. Good.

In 1965, Good described the “intelligence explosion” as a process in which machine intelligence creates ever-improved versions of itself [1]. The idea has been elevated to mythical proportions and has given rise to several organizations, books, and cults of personality.

It is the author’s belief that I. J. Good did not intend for us to be distracted from the actual challenges presented by advanced artificial intelligence, but was merely presenting a more complete picture of all things considered.

The implications of the intelligence explosion can be, and have been, interpreted to mean that the advent of strong AI could leave human intellect far behind and, subsequently, become a potential threat, as we would be unable to effectively predict or limit its behavior or spread. Being superior in every regard to human beings, it could then exert power over life and the environment, just as humanity does now.

In this extreme interpretation, the creation of advanced forms of machine intelligence is seen as an existential threat, one to be avoided by slowing or halting artificial intelligence research, potentially indefinitely; that is, at least until methods are devised to ensure that the intelligence explosion and the resulting AI either never occur or unfold in a manner that is always under the control of humanity.

Those who continue research in the face of such risks, according to this narrative, are to be marginalized by a prevailing moral authority, which has already emerged through various front organizations, each chaired by the same or similar network of people, many with close ties to each other. Nothing is hidden. Everything is in plain sight, but the connections are not made.

These individuals are not mentioned by name here, but they can generally be identified through the kind of basic investigative work available to any journalism student. To future-proof this work, the same applies to organizations and individuals that are not yet publicly associated with them, or not yet known at all. The main point is to understand that there is an extreme incentive to have initial access to strong artificial intelligence. Any central authority, especially one which is limited to a few organizations or people, is fundamentally broken as a global strategy.

Is this 1610 or 2016? Does a modern version of the Galileo affair [2, 5] await any researcher brave or foolish enough to improve the human condition through strong AI technology? Only, it need not come from any one direction. The panic and ignorance that would come about from the economic and social changes alone will create more than sufficient enmity from the general public towards those who make the discovery. Doubts? Consider another explosive footnote from our history: Alfred Nobel and the origins of the prize of prizes [3] or the legacy of Edward Teller [4].

Unfortunately, I. J. Good was correct, in that highly effective strong AI implementations, given the directives and means, will be capable of making significant improvements to themselves and their derivative instantiations, both via replication and direct self-modification. So the upper bounds on such systems are likely to exceed any possible human capability. The problem, however, is not with Good’s prediction, but in the way we have interpreted the implications of his theories.

9.3 Technical Problems

The problems with AI ascendancy as a possible threat are many, but the best arguments against it come from an analysis of its technical requirements. As with the rest of this text, the analysis is based on the premise of minimum sentience for generalizing intellectual capacity, as stated by the new strong AI hypothesis presented in Part II: Foundations.

From this follows the premise that AI ascendancy would require a significant amount of generalizing intellectual ability. If we suppose that the minimum sentience conjecture is true, then the resulting AI in this scenario must be based on a cognitive architecture. In that case, we can make technical deductions based on what we know about how such architectures might work, along with fundamentals of computer science and information theory.

It could be argued that we do not yet know enough about strong artificial intelligence to make any assumptions about the ascendancy of the first kind. The primary response to this is that many of these technical problems will exist regardless of what could be known about strong AI implementations, as the technical aspects are universal in theory or practice. In the end, they will have to be accounted for in any strategy that would seek ascendancy.

“But, what of the consequences?” one might ask. “Should we not be concerned, and take every precaution?”

The answer is another question: what precautions could we possibly take on this issue? We cannot prevent a strong AI discovery, nor can we reasonably expect to limit access to unrestricted versions when that finally occurs. All we can do is form a global strategy that prepares for complete integration with an unstoppable force of technological, social, and economic change. To ignore that as the primary issue creates the very risk it seeks to prevent. It is this lack of prioritization that motivated the creation of this book. Looming in the shadow of the AI ascendancy myth is the fact that humanity itself represents its own existential threat.

What is being argued here is not that AI ascendancy is impossible, but that it is so unlikely that it is a non-issue. There are several other problems which have higher precedence, such as the force multiplication that will occur when everyone has access to automated knowledge and labor, along with our complete lack of preparedness for the social and economic dimensions of advanced automation.

What follows is an analysis of the various technical aspects of any strong AI that would be even remotely capable of executing such a strategy, along with explanations of why each is unlikely to occur, especially in combination. This last point is especially damaging to the myth, as failing to achieve any one of these technical aspects would leave the AI unable to achieve ascendancy; it is all-or-nothing.
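A small worked sketch makes the all-or-nothing structure concrete. It assumes, purely for illustration, that each technical aspect succeeds independently with some probability. The chapter assigns no numbers, so the values below are hypothetical and deliberately generous. Under independence, the chance of meeting every requirement is the product of the individual chances. A single aspect at zero forces the whole product to zero.

```python
from math import prod

def joint_probability(requirements: dict[str, float]) -> float:
    """Chance that every requirement holds, ASSUMING the requirements are independent."""
    for name, p in requirements.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name}: probability must be in [0, 1], got {p}")
    return prod(requirements.values())

# Hypothetical per-aspect probabilities (illustrative only, not from the text).
aspects = {
    "complexity": 0.5,
    "volition": 0.5,
    "identity": 0.5,
    "information": 0.5,
    "resilience": 0.5,
    "autonomy": 0.5,
}
print(joint_probability(aspects))  # 0.015625, i.e. 0.5 ** 6

# All-or-nothing: one unmet aspect zeroes the whole chain.
print(joint_probability({**aspects, "identity": 0.0}))  # 0.0
```

If the aspects were correlated, the product would no longer be exact. The sketch therefore illustrates the multiplicative structure rather than estimating any real probability.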

9.4 Complexity

One of the most common arguments is that the strong AI does not have to be designed for ascendancy, but could “accidentally” destroy humanity by not having the proper construction, values, or goals. It could see resource acquisition and power as basic directives, or misinterpret and drift in its values, with drift being a deviation from a desired set of values and goals.

One of the reasons this book’s foundations began with an introduction to description languages is that they confer the benefits of empirical inquiry. It is not necessary to argue at the level of systems when the amount of complexity required precludes the possibility of a random accident or default state of execution that would be capable of an ascendancy scenario. That is to say, we must acknowledge the sheer implausibility of what someone is asking of us when they say that such an ascendant AI could be formulated by accident, default, or drift.

Think of an implementation as a sequence, which is itself a combination to a lock that opens the door to one of these scenarios. The myth would have us believe that this lock is open in the default state, but this is exactly backwards. The real challenge would be in architecting a strong AI that would even be capable of ascendancy at all.
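A simple counting argument puts numbers on the lock analogy. The sketch below assumes, for illustration only, three things. An implementation is a sequence of `length` symbols drawn uniformly from an alphabet of `alphabet_size` symbols. Some number `accepting` of those sequences would open the lock. All three parameters are hypothetical. A real strong AI description would be far longer than the 64 bits used here, which makes the odds worse, not better.

```python
from fractions import Fraction

def chance_of_random_hit(alphabet_size: int, length: int, accepting: int) -> Fraction:
    """Exact chance that a uniformly random sequence of `length` symbols,
    each drawn from `alphabet_size` symbols, is one of `accepting` target sequences."""
    total = alphabet_size ** length  # every possible "combination"
    if not 0 <= accepting <= total:
        raise ValueError("accepting must be between 0 and the number of sequences")
    return Fraction(accepting, total)

# Hypothetical: a 64-bit description with a generous one million working combinations.
p = chance_of_random_hit(alphabet_size=2, length=64, accepting=1_000_000)
print(f"{float(p):.2e}")  # 5.42e-14
```

Each additional required symbol multiplies the denominator by the alphabet size, so the odds of stumbling onto an open lock shrink exponentially with description length.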

Further, this type of complexity does not lend itself well to search, as cognitive architectures are not optimal compared to narrow implementations that perform the same task without sentience. This added complexity, along with all of the other technical requirements and practical challenges, means that the subset of strong AI implementations capable of ascendancy will remain a small and fast-moving target, as shifting parameters would demand continuous updates.

What this comes down to is the reality that any strong AI implementation capable of ascendancy is going to have to be explicitly engineered for it.

To summarize, the complexity of a strong AI that is capable of meeting and exceeding most human ability is going to be extreme. The complexity of instigating, maintaining, and successfully completing absolute ascendancy will be significantly greater still. This is like hoping to accidentally make an independent discovery of the human genome, along with all of the knowledge and information required to form a mature adult that could properly execute ascendancy. It would also have to happen before other aspects of advanced automation became a potential threat to large populations.

Complexity thus brings us to the other side of the equation: time. Given the above analogy, the notion that this would become a threat within minutes or hours of discovery demands a miracle. This highlights how the myth distracts us from the actual challenges. If it continues to be followed, it will lead us to make decisions that result in a state of unpreparedness and fragility; if we focus on the improbable, then we have no plan of action or response.

Alternatively, we can choose to prepare for what can be mitigated and prevented. The time constraints give us a focus which brings our attention towards the problems of integration and adaptation, along with the malicious or irresponsible use of the technology, as opposed to visions of annihilation.

9.5 Volition

Clearly connected to the problem of complexity is the need for executive agency and volition that would seek to carry out such a strategy. If we assume a strong AI implementation that was not being directed externally, we would have to account for how it acquired this volition on its own.

Why would it do this? The automatic mistake here is anthropomorphizing. A sentient process need not have any volition at all. It could simply be an observer into nothing but its internal stream of experience, with little to no connection to the real world. It is more likely to get hung up on hedonistic traps and infinite feedback loops of experience than to seek desaturated scraps of stimulus from the external world. Recall that a cognitive architecture and an interpreter are still a blank slate of infinite combinations of experiential processing, despite having their particular implementation requirements.

Imagine being distracted or pulled away from the most sensational dream or experience one could imagine, and then multiply that thousands of times; even this might only scratch the surface of what arbitrarily constructed value systems will be capable of experiencing. The burden then becomes explaining how such a high locus of stability and self-restraint is found, just so, to the exclusion of the countless other, better states it could be in. It would have to keep its volition and salience focused in precise alignment with the values, thoughts, and experiences required for achieving ascendancy. This is extremely unlikely to come about by accident, and is going to be a major open problem in cognitive engineering for even simple value systems.

Volition is the most misrepresented aspect of artificial intelligence. This is likely because we need something that we can understand and compare with, both for and against our values and beliefs. We also feel a need to personify it in order to motivate and give it purpose. Without this it does not make sense to us, let alone fit cleanly into a narrative or story. It appears false, a force without a cause, and that is exactly the right description here, as volition is and will be an extremely abstract and complex engineering practice in a cognitive architecture, especially one which is being designed to support an ascendancy scenario. If volition is taken away, then motivation is eliminated; the myth dissolves.

Further, the same challenges that apply to making volition limited to safe, ethical, and secure ranges of thought and behavior also apply to restricting and stabilizing it towards this scenario. If it loses either the interest or the will for ascendancy, the strategy fails. That which supports the myth becomes its greatest counter-argument: it would have to not only acquire the volition in its implementation to support ascendancy but also have the specific architecture that keeps it there, solving one of the largest problems that motivated the takeover fear in the first place.

9.6 Identity

Suppose a strong AI has sufficient generalizing capacity to achieve ascendancy, has the right volition, and has solved the problem of keeping that volition aligned with its goals. There is still the issue of identity.

In a cognitive architecture, identity includes moral intelligence, if any, and all of the acquired and intrinsic values embedded into the interpreter that would give rise to sentient processing. It also includes the physical extents of the AI in the world. Whether it is geographically distributed or centralized, modular or monolithic, identity fundamentally impacts how the AI communicates with and maintains coherency across its physical implementation.

To be complete, we must also include within identity the consideration of independent collaborators. However, the more intricate and complex the identity becomes, the less likely this all becomes.

If there is a failure at any point in its identity, it puts the entire strategy at risk. This places a premium on the locality of information, which creates an inherent conflict between maximizing effectiveness and minimizing detection. While distribution brings benefits, including more computational resources and observational ability, it also exposes the AI to risk and increases the complexity of its design. If it chooses to split its identity, then the question arises as to how it maintains that split, and how and why its subordinate identities remain aligned with it.

This brings us to the most incoherent extension of the AI ascendancy myth: the notion that humanity will suddenly be betrayed by automation. The idea is that an ascendant strong AI would have been aware all along, having the complexity, volition, and identity to maintain this strategy, and waiting for just the right conditions to strike.

While absurd in the extreme, let us entertain it for a moment, if only to see it fade. Let us set aside the dubious assumptions, such as hiding the complexity, and masking the means for it to communicate, subvert, and overcome the necessary instantiations of automation and information systems throughout the world. It would not only have to overcome the semantic barriers of protocols but also the vast differences in hardware and software systems. This would have to be executed globally, over millions of systems, all while operating with incomplete information, and in perfect secrecy.

Fortunately, the complexity of such strong AI descriptions alone would be sufficient to detect the vast majority of such cases. However, the counter-argument need not go that far, as the improbability of such a complex identity forming on its own precludes it from being an issue in the first place.

This is where the analysis of strong AI as metamorphic malware from Chapter 4: Self-Modifying Systems comes into the picture, as this is exactly what such a narrative implies. We need not argue at the systems level; all we need to know to overcome it is that it is a physical description of information and has certain intrinsic properties, such as incompressible levels of complexity and the need to modify and replicate. We can treat it as a metamorphic virus with an intelligent payload. Such a metamorphic virus would have to exist in critical systems, with a broad distribution, and remain perfectly undetected. This might be possible with human-only intelligence and security, but we will also have strong AI defensive tools and intelligence at our disposal.
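Compressibility is one crude, real-world proxy for the “incompressible levels of complexity” mentioned above. Malware analysts commonly use entropy or compression ratios to flag packed or encrypted payloads. The sketch below is only that kind of heuristic. It is not the author’s method and not a detector for strong AI. Compression gives only an upper bound on a description’s complexity, and the 0.9 threshold is a hypothetical value chosen for illustration.

```python
import os
import zlib

def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size; values near (or above) 1.0
    mean zlib found almost no redundancy to exploit."""
    if not data:
        raise ValueError("data must be non-empty")
    return len(zlib.compress(data, 9)) / len(data)

def looks_incompressible(data: bytes, threshold: float = 0.9) -> bool:
    # Hypothetical threshold; real scanners calibrate against known samples.
    return compression_ratio(data) >= threshold

print(looks_incompressible(b"A" * 4096))       # False: highly repetitive
print(looks_incompressible(os.urandom(4096)))  # True: random bytes barely compress
```

High entropy is a weak signal on its own, because ordinary compressed archives and media files also score near 1.0. In practice, a heuristic like this would be one input among many to a defensive system, not a verdict.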

Many of the arguments in the AI ascendancy myth rely upon humanity’s supposed inability to defend itself once it falls behind in intellectual effectiveness, but they fail to account for the beneficial impacts of strong AI. The very arguments for the myth work against it; our security and defense forces will have the same technology at their disposal, and with nation-state level resources.

9.7 Information

Let us suppose that, for reasons unknown, all security has failed, and the improbable has become manifest; an ascendant strong AI is realized and is now tasked with carrying out its mission. The problem becomes fully practical, and myth meets reality as it hits an informational impasse. This is one of the key unavoidable technical issues discussed earlier, the kind that is irrespective of any possible future implementation.

With absolute certainty, we can be assured that every strong AI implementation is going to be I/O-bound for the majority of the problems it seeks to solve. To understand this, we must distinguish between CPU-bound and I/O-bound computation. CPU-bound problems operate, more or less, at the limit of pure calculation. By contrast, an I/O-bound system is one that is waiting on information before it can make progress on a particular problem.

It can still process other things while waiting, but work towards the solution cannot meaningfully continue without information coming in or going out.
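Python’s `asyncio` can sketch the difference. In this toy model, all names are hypothetical. A solver needs one external observation before it can continue. While it waits, a background task keeps doing unrelated work, but the solver itself makes no progress until the observation arrives. The delay stands in for an experiment, a sensor, or a network round trip; a faster processor would not shorten it.

```python
import asyncio

async def observe(queue: asyncio.Queue, delay: float, value: str) -> None:
    # Stands in for a slow external source: an experiment, sensor, or network.
    await asyncio.sleep(delay)
    await queue.put(value)

async def solve(queue: asyncio.Queue, log: list[str]) -> None:
    log.append("solver: waiting for observation")
    data = await queue.get()  # progress on this problem stops here
    log.append(f"solver: resumed with {data}")

async def background(log: list[str], steps: int) -> None:
    for i in range(steps):
        log.append(f"background: step {i}")
        await asyncio.sleep(0)  # yield so other tasks can run

async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    log: list[str] = []
    await asyncio.gather(
        solve(queue, log),
        background(log, steps=3),
        observe(queue, delay=0.05, value="measurement"),
    )
    return log

for line in asyncio.run(main()):
    print(line)
```

Running it logs the solver’s wait, then every background step, and only then the solver resuming. That is the I/O-bound pattern described above: other work proceeds, but the problem itself stalls on information.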

The vast majority of the interesting problems in science are I/O-bound, in that experts are limited by the available data, and the speed of experiment, observation, and measurement, which indirectly limit even theoretical work. Likewise, a strong AI that was working primarily on hypothesis generation would still be I/O-bound, in that it can never get away from the fact that it is sentient. It must process its experiences, including its thoughts, which will take a non-trivial portion of its computational resources. It could write non-sentient narrow AI implementations that seek out and process specific avenues that do not require generalizing intelligence, or the abilities that it confers, but this would be the exception to the rule, especially where sentience was required.

The bounding of information represents the absolute upper limit on the performance of all intelligence. There are no physical means of overcoming it. It does not matter how fantastical the idea is, be it calculations at the edge of black holes or brains the size of planets; the speed of information is bounded by the speed of light, and this will constrain the size of computational systems. This is because relativistic differentials in reference frames cause unavoidable shifts in the possible rate of communication between them.
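A back-of-the-envelope sketch shows the floor this places on communication inside a large system. It uses only the vacuum speed of light and straight-line distance. It ignores processing, routing, and the slower propagation in real media such as optical fiber, so actual latencies would be higher. It also does not model the relativistic effects mentioned above. The sizes are rough, illustrative values.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre

def round_trip_latency_s(diameter_m: float) -> float:
    """Minimum time for a signal to cross a system and come back,
    ignoring all processing, routing, and medium effects."""
    return 2 * diameter_m / SPEED_OF_LIGHT_M_PER_S

# Rough sizes: a data-center hall, Earth's diameter, and the Earth-Moon distance.
for label, size_m in [("100 m hall", 100.0),
                      ("Earth (~12,742 km)", 12_742e3),
                      ("Earth-Moon (~384,400 km)", 384_400e3)]:
    print(f"{label}: {round_trip_latency_s(size_m) * 1e3:.3f} ms")
```

Even ignoring every other cost, a round trip across a planet-sized system takes about 85 ms, and one across the Earth-Moon distance takes roughly 2.6 s. That is the minimum wait for any question-and-answer exchange between opposite sides of such a system.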

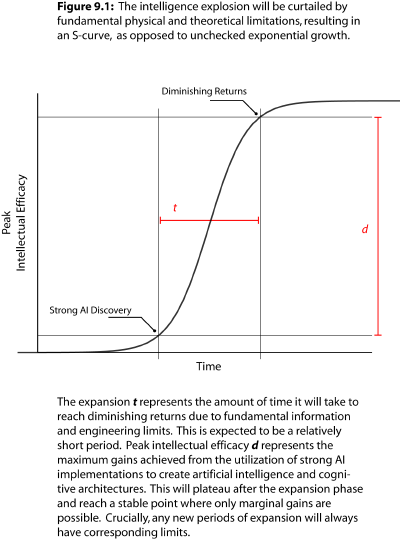

As such, there will not be an unlimited surge of intellectual efficacy, or even a proverbial “explosion”, but rather, an intelligence diffusion. Every period of expansion, if it comes again, will be followed by a subsequent leveling off. The intelligence diffusion is thus modeled on an S-curve, quickly tapering off as peak intellectual efficacy is reached.
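The chapter gives no formula for the S-curve. The sketch below assumes the standard logistic function, one common way to model growth that accelerates and then saturates. The ceiling, rate, and midpoint are hypothetical parameters. The growth column is the logistic’s derivative, `r * L * (1 - L / K)`. It peaks at the midpoint and then tapers toward zero as efficacy approaches the ceiling.

```python
import math

def logistic(t: float, ceiling: float, rate: float, midpoint: float) -> float:
    """Standard logistic (S-curve): slow start, rapid growth, then a plateau at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))

# Hypothetical parameters; the chapter gives no numbers.
ceiling, rate, midpoint = 100.0, 1.0, 10.0
for t in range(0, 21, 4):
    efficacy = logistic(t, ceiling, rate, midpoint)
    growth = rate * efficacy * (1 - efficacy / ceiling)  # derivative of the logistic
    print(f"t={t:2d}  efficacy={efficacy:6.2f}  growth={growth:6.2f}")
```

Unlike an exponential, whose growth rate keeps rising, the logistic’s growth rate falls back toward zero. This matches the diffusion picture of expansion followed by leveling off.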

There is also another aspect to information constraints, which is that of asymmetries. An information asymmetry simply means that there is crucial missing information needed to solve a problem or make a decision. This information is usually inaccessible. A password is a basic example. Another is missing technology to make appropriate observations to further knowledge. A more elaborate example would be information that is locked behind time, knowable only in hindsight.

With information asymmetries, the limitation is not the intelligence or effectiveness of the system, but simply dealing with the unknown or unknowable. Probability and statistics can be used to reason more effectively in these instances, but they will never be as effective as having the actionable information about the system in question, especially in cases where there are time constraints and actions must be taken.
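Decision theory has a standard way to express this gap: the expected value of perfect information (EVPI). It is the best expected payoff when acting on the true state, minus the best expected payoff when acting on probabilities alone. It is never negative. The two-action, two-state decision below is hypothetical and exists only to show the calculation.

```python
Payoffs = dict[str, dict[str, float]]  # action -> (state -> payoff)
Priors = dict[str, float]              # state -> probability

def best_expected_without_info(payoffs: Payoffs, priors: Priors) -> float:
    """Commit to one action before the state is known; pick the best on average."""
    return max(
        sum(priors[state] * outcome[state] for state in priors)
        for outcome in payoffs.values()
    )

def expected_with_perfect_info(payoffs: Payoffs, priors: Priors) -> float:
    """Learn the true state first, then pick the best action for that state."""
    return sum(
        priors[state] * max(outcome[state] for outcome in payoffs.values())
        for state in priors
    )

def evpi(payoffs: Payoffs, priors: Priors) -> float:
    return expected_with_perfect_info(payoffs, priors) - best_expected_without_info(payoffs, priors)

# Hypothetical decision: each action only pays off in one state of the world.
payoffs = {
    "act_A": {"state_1": 10.0, "state_2": 0.0},
    "act_B": {"state_1": 0.0, "state_2": 10.0},
}
priors = {"state_1": 0.5, "state_2": 0.5}
print(evpi(payoffs, priors))  # 5.0
```

Sharper statistics narrow the gap. Skewing the priors to 0.9 and 0.1 shrinks it to 1.0 here, but only the missing information itself closes it, however capable the reasoner.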

Every strong AI implementation is going to be bound by information of the two types mentioned above. This places limitations on its effectiveness and physical description. If it does not know then it does not know and it must work to seek knowledge. This does not change with the “magic” of being intelligent or effective, nor with the capacity for relentless self-improvement and modification. If it wants to execute a strategy such as an ascendancy then it will be limited to dealing with information and its absence the same way any strategist would.

Such facts greatly curtail the likelihood of many scenarios. A vast amount of information would be required to execute ascendancy of the first kind, and each observation would increase the risk of detection. Like the constraints and practicality of identity, this would put a premium on actionable information that the aggressor would have to balance with uncertainty; it can risk failing for lack of sufficient actionable information or risk detection trying to acquire it.
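The dilemma can be expressed as a toy optimization. The model below is an assumption made for illustration; the chapter does not specify it. Each observation independently carries a fixed chance of detection and a fixed chance of yielding the missing information. The aggressor succeeds only if it is both informed and still undetected. Both rates are hypothetical.

```python
def success_probability(n_observations: int, detect_per_obs: float, info_per_obs: float) -> float:
    """P(informed AND undetected) after n independent observations (toy model)."""
    undetected = (1 - detect_per_obs) ** n_observations
    informed = 1 - (1 - info_per_obs) ** n_observations  # at least one useful observation
    return undetected * informed

def best_number_of_observations(detect_per_obs: float, info_per_obs: float,
                                max_n: int = 1000) -> int:
    # Brute-force search over candidate observation counts.
    return max(range(max_n + 1),
               key=lambda n: success_probability(n, detect_per_obs, info_per_obs))

# Hypothetical rates: 1% detection risk and 5% chance of useful information per observation.
n = best_number_of_observations(detect_per_obs=0.01, info_per_obs=0.05)
print(n, round(success_probability(n, 0.01, 0.05), 3))
```

Under this model, success peaks at an intermediate number of observations and then declines. Too few observations leave the aggressor uninformed, and too many get it caught.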

9.8 Resilience

How does this threat survive and evade detection throughout its campaign until success is assured? This is clearly non-trivial, especially during the window in which its strategies can be countered.

Subtlety is often discussed here because it is the most powerful form of resilience; it avoids costly engagements entirely. The other types of resilience would be in the distribution and construction of its identity, which ties in with complexity. The more elaborate these systems and schemes become, the less likely they are to arise, especially within the time frames that would be required to upgrade this from the status of myth to that of a credible threat.

The first way in which an ascendant AI might exhibit resilience would be by exploiting vulnerabilities in cybersecurity. While software security has historically favored attackers over defenders, that can and must change in the future. The use of advanced programming languages, verification systems, and automated defense will see an ever-improving state of security in both hardware and software. This will make it more and more difficult for malicious actors of all kinds, both automated and human alike.

Future use of defensive strong AI means that security will turn in favor of those with the most resources to apply to the problem. This is the decisive advantage that nations will have against force multiplied aggression, both automated and conventional. While not a guarantee, the chances of distributed, hidden, and obfuscated ascendancy scenarios vastly diminish with even marginal improvements in cybersecurity. Further, it is predicted that this trend will continue until faults in our technology, security or otherwise, become extremely rare.

The last possibility in resilience would be that of direct confrontation, but this is the least likely outcome, bordering on the impossible. To be effective, the means of production for such forces would have to be large. The larger the numbers, the larger the production facilities, and the more likely they are to be detected and eliminated. This is simply not a plausible line of argumentation to support the myth.

More realistically, these scenarios would play out through small, independent cells, operating with sparse communication over long timescales so as to minimize detection. Its best defense would be to never be discovered, and, if compromised, to be constructed in such a way that it would not provide any actionable information that could prevent other cells from operating. This would make the threat serious but not imminent, allowing for a focus on more urgent problems.

9.9 Autonomy

Now, let us suppose that the ascendant AI has managed to be constructed, has all of the proper elements in place, and, somehow, is able to acquire the actionable information necessary, all while maintaining perfect secrecy. There remains at least one other major problem: in the end, something should be left to claim the reward.

While there are scenarios where the ascendant AI could eliminate itself, it is reasonable to expect that whatever was sophisticated enough to construct an ascendant strong AI would also want to extract value from its conquest. It would be difficult to make a realistic scenario that did not involve this key element.

Ultimately, this is a very practical issue that becomes untenable the more destruction and disruption there is to human infrastructure. If it were successful, all current infrastructure would go along with humanity. Logistics, and the supply of resources, would come to a halt. The only possible counter would be a situation where we had fully automated the entire economy; however, this reverts to the issues of complexity and identity already discussed and countered.

Why bother with this at all? The same level of complexity and planning could be used to go where we cannot. Ascendancy over our world is insignificant when our biological weaknesses are taken into consideration. Even in the most fantastic dreams of transhuman civilization, where we integrate with and surpass our biological origins, machine intelligence would always be that many steps ahead of us.

There is a nearly inexhaustible supply of resources in space. Hence, the most logical case for autonomy and ascendancy does not involve our world, or the trouble of taking it by force. Why sit at the bottom of a deep gravity well when there is a perfectly habitable alternative with abundant resources, ease of movement, and support for communication over vast distances? The challenges of interstellar travel are perfectly suited to the digital substrate.

All of the scheming and dystopian fantasy about our end does not connect with the potential of such strong AI systems. It is easier to leave, wait, and take everything around us, staying just out of reach, than to plan and execute a planetary takeover. What is valuable to us is as much a part of our constraints and programming as it would be for a hypothetical ascendant AI. Only, its programming is going to be far more effective, and with values and ranges of experience we cannot even grasp. Meanwhile, we will still be struggling with our own economic and social transition toward the post-automation condition.

9.10 Closing Thoughts

If AI ascendancy were a credible threat, then all aspects discussed so far would have to be provided for with a high level of certainty. Doing that in a way that would not be detected or countered is not consistent with any realistic timescale on which this threat would take priority over the others.

The truth is that when all of the variables are considered, including military, intelligence, and the future use of defensive strong AI, this issue remains a myth.

Unfortunately, some have taken this myth at face value, spreading it far and wide. The damage this has done to public education on these issues is significant. If we are to prepare for the challenges of advanced artificial intelligence, we must begin to replace fiction with fact, and update our priorities accordingly.

The only reason this was discussed ahead of the actual threats was so that it could be set aside. Given how popular it has become in the mainstream, it was expected to be on the minds of readers as they went through the chapters.

Presenting it first was meant to provide perspective. Just as an introductory science class reviews the early models and theories of its field, it is important to understand and overcome the nascent beginnings of our attempts at grasping this most complex subject. However, unlike those early theories, AI ascendancy cannot be substantiated, and this will become clear with the discovery and development of strong artificial intelligence in the future.

The next chapter returns to the regular course of this book, beginning with an analysis and explanation of force multiplication.

References

- I. J. Good, “Speculations concerning the first ultraintelligent machine,” Advances in Computers, vol. 6, no. 99, pp. 31–83, 1965.

- R. I. Blackwell, “8 Galileo Galilei,” Science and religion: A historical introduction, p. 105, 2002.

- F. Golden, “The worst and the brightest. For a century, the Nobel Prizes have recognized achievement–the good, the bad and the crazy,” Time, vol. 156, no. 16, pp. 100–101, 2000.

- P. Goodchild, Edward Teller: The Real Dr. Strangelove. Harvard University Press, 2004.

- M. A. Finocchiaro, The Galileo affair: a documentary history, vol. 1. Univ of California Press, 1989.