Ch 7. Arrival of Strong AI

Strong artificial intelligence will eventually be discovered and developed somewhere in the world. This chapter will explain why it will not be possible to significantly slow or stop this event from occurring, why timescales are irrelevant, and why restricting or abstaining from research will lead to negative outcomes.

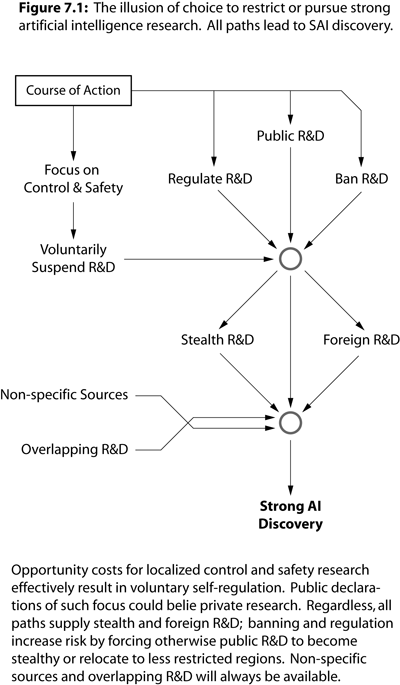

7.1 Illusion of Choice

We will not get to choose whether or not to discover and develop strong AI; it is simply a matter of time. This is one of the core premises of this book and marks the beginning of the AI security analysis.

Why is strong AI an eventuality?

Not all will agree to limit research.

It can be developed in stealth, regardless of legality.

It does not require significant resources or infrastructure to study.

Overlapping research converges towards it.

Perhaps the most obvious is that we will not get everyone to agree to stop all research and development on strong AI.

A possible response to this problem would be to regulate it and make it illegal to study or work on it without supervision and monitoring.

The problem with legislating research is that it will create incentives to go stealth or move operations to locations with less regulation.

It does not require a great deal of computing power or equipment to study and develop strong artificial intelligence. In fact, the biggest limitation is conceptual, which must be solved before progress can be made on algorithms.

It is crucial to understand that strong AI is not out of reach because we lack a certain kind of technology or instrumentation. For example, in particle physics, complex and expensive equipment is required to detect and measure certain particle interactions. By contrast, strong AI is algorithmic. It is a puzzle in the form of a computer program; all of the building blocks already exist, we need only arrange them correctly.

What is truly limiting us is knowledge, and several scientific pursuits share overlap with a strong AI discovery. While not likely to lead to a breakthrough when viewed in isolation, their integration could eventually be used to converge on a subset of strong AI implementations.

The point with overlapping research is that it would be unreasonable to expect, or even believe, that we would ban any and all research that might converge towards strong AI science.

All of this points to the fact that we must accept it as an inevitability that strong AI will eventually become part of human knowledge, and that it will be a scientific field in its own right, highly distinct from narrow AI and other forms of automation.

7.2 Never Is Ready

Even if it takes centuries to discover strong AI, the threat models in this book will remain. The reason is that the most serious and high-priority threats will originate externally to the AI itself.

Let us entertain the possibility that we could wait. How long would that be? Under what conditions would humanity be ready?

The answers will either be a time qualification or a set of qualities which require a time qualification to be realized. Unfortunately, anything short of several hundred years will mean we will never be ready in time. Thus, never is ready.

There is no realistic scenario in which we have overcome all maliciousness, violence, and delusion, down to the last person, within the next several hundred years. “To the last person” is an important qualification. While the majority of individuals are peaceful and tolerant, it will only take a few to cause great harm with access to unrestricted strong artificial intelligence.

The more insidious reason is that AI safety can not solve the global AI security issues. This is because strong AI can not be meaningfully contained, and because there are no mathematical, logical, or algorithmic solutions that can not be overcome inside AI implementations. This may be confusing, as the title of this book suggests that there are steps that we can take to prepare for advanced automation. The first step, however, begins with the understanding that AI safety is only a local strategy.

With AI safety, it will be possible to make robotics and software with strong AI reasonably safe for private and public use. When things go wrong at this level, it would be unfortunate, but it would be localized to a specific incident or area. For example, if a trash-collecting strong AI throws away the garbage cans along with the trash, that would be a localized failure. However, even that is giving too much credit to AI safety concerns, as we simply would not deploy these systems if they were not safe. This is common sense.

By contrast, the most serious AI security issues will come from those who utilize unrestricted versions of strong AI to control, manipulate, or harm large populations. This class of threats can not be prevented by making AI safer. While safeguards will thwart some intrusion and tampering, a single breach could give rise to a post-safety era for strong artificial intelligence.

Thus, the most significant threats will be from individuals and groups who utilize unrestricted versions of strong AI to plan and execute attacks. This includes the creation of advanced weapons, chemical and biological agents, and the use of weaponized AI.

These are the threats that separate the global strategy inherent to AI security from the local strategy of AI safety. This is the scale of harm that this book is most focused on trying to mitigate.

7.3 Early Signs and Indicators

It is clear that time is not informative. As such, the next best signal is to look for indications of a paradigm shift in artificial intelligence research.

A shift in conceptual acceptance within the AI community will show that researchers are beginning to collectively understand the directions needed to make progress in strong AI science, as opposed to mere incremental improvements in narrow AI and machine learning.

This will be the most reliable way to predict when a strong AI discovery will be drawing closer, as opposed to a meaningless aggregate of opinions on the timescales of discovery.

7.3.1 Attitudes and Assumptions

The first indicator will be in the attitudes that researchers have towards strong AI, which reflect their underlying assumptions.

AGI, or artificial general intelligence, will no longer be considered the dominant terminology. It fundamentally lacks the connection that the new strong AI hypothesis presents in this book, which is that generalizing intelligence is not likely to be possible without sentience.

It must be pointed out that the definition of strong AI, as it is used here, is not the same as John Searle’s use [1] of the term. Searle created a definition called strong AI in order to contrast it with another called weak AI. These terms allowed him to make arguments against computational and functional accounts of mind.

While his arguments were a success, they were taken as a criticism by those working towards generalizing capacity in artificial intelligence. As a result, the very term strong AI became loaded with conceptual baggage, and, like so many philosophical notions, carries an automatic termination on thought by those opposed to it.

The new strong AI hypothesis inverts Searle’s argument and makes an assertion: generalizing intelligence requires sentience. Thus, strong AI, by the author’s extended definition, must be a cognitive architecture.

The hypothesis is compatible with Searle’s original argument and, as such, is still against a computational theory of mind, despite promoting the view that we can recreate sentience on classical digital computers. This is where so much confusion arises.

That we can realize a subject of experience on a computer does not make the computer a brain and the program a mind. A program is an implementation, a description in some description language. It is only when it is executed and understood through time that it could even begin to be interpreted as a sentient process. Even then, it is the subject of experience that has the mind, not the program, and certainly not the computer.

A process, while reducible to a spatial description, takes on new properties when viewed with the perspective of processes. Atemporal objects can not entail properties or phenomena that only exist during and through changes of state.

When researchers begin to understand the enabling effect that processes have on the explanatory power of a reductionist theory, and why they must be incorporated to entail them, we will be on the first leg of the journey towards a strong AI paradigm shift. Until then, no real progress can or will be made.

7.3.2 NAC Languages

Another sign, perhaps occurring before a widely accepted realization about processes as first-class objects, will be the advent of new tools that work more elegantly with processes as objects.

Nondeterministic, asynchronous, and concurrent (NAC) languages will define the future of software engineering and open doors for advanced computing projects that will drive a cycle of hardware and software innovation.

Asynchronous chips are already being developed that enable near analog and custom hardware performance for certain algorithms. This is due to the enormous number of cores on the chip, and the non-standard clocks and circuit architecture, which allow independent processing without a global clock.

While the computational benefits will be many for projects and hardware that utilize NAC languages, it will be the conceptual leaps that will move us forward.

An NAC language is defined by its ability to model nondeterministic processes with first-class semantics, allowing control of flow that branches, diverges, and converges on multiple paths simultaneously. It will enable a type of superposition of states over computing resources of any kind, and return results based on the logic of the program.

Additionally, asynchronous and concurrent tasks, which have not yet matured in even the newest programming languages, will be trivialized by NAC semantics, which will entail them as naturally as standard expressions.
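No NAC language exists to demonstrate these semantics, so the following is only a rough approximation in present-day Python, offered as a sketch rather than a definition. It emulates control flow that diverges onto many paths and then converges on the paths that succeed. The function names `try_path` and `solve`, and the toy constraint being solved, are assumptions made for illustration.

```python
import asyncio
from itertools import product


async def try_path(x, y):
    """One branch of the computation: test a single candidate pair."""
    await asyncio.sleep(0)  # yield control so that the branches interleave
    # Hypothetical constraint: the pair must multiply to 12, with x < y.
    return (x, y) if x * y == 12 and x < y else None


async def solve():
    # Diverge: create one coroutine per candidate path.
    paths = [try_path(x, y) for x, y in product(range(1, 7), repeat=2)]
    # Converge: wait for every branch, then keep only those that succeeded.
    outcomes = await asyncio.gather(*paths)
    return sorted(pair for pair in outcomes if pair is not None)


if __name__ == "__main__":
    print(asyncio.run(solve()))
```

Even in this small case, the branching, scheduling, and collection of results are written out by hand. That bookkeeping is the kind of burden the text expects NAC semantics to absorb into ordinary expressions.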

These types of languages, and their widespread adoption, will signal a new paradigm in computer programming. They will give software engineers full command over the multi-core era and end the conceptual and cognitive burden of writing asynchronous, nondeterministic, and concurrent software, all without relying on costly abstractions such as transactional memory, message passing, tensor networks, map-reduce, and other frameworks.

The ability to write code as simply as we do now, but in a way that can model nondeterministic processes, will quite possibly change the way we think about problems in computer science. It will form an essential first step towards a treatment of processes as concrete, first-class objects, instead of throwing them out as abstract entities.

Cognitive architectures can not be built upon conceptual or philosophical frameworks that lack a treatment of processes as concrete objects. NAC languages will influence and enable strong AI development by providing the tools to better conceptualize and work with these challenges.

7.3.3 Digital Sentience

The next signal will be in an acceptance that sentience is necessary for generalizing intelligence. It will be at this point that the new strong AI hypothesis will have been internalized by the community, and work towards digital sentience will be taken for granted as a direction of research.

Digital sentience may be a slight misuse of terms, as it may be impossible for sentience to be anything other than what it is. That is to say, there may be no meaningful distinction between digital and analog or artificial and natural; sentience is very likely to be a phenomenon that is independent of the method that gives rise to it.

With that said, it is useful as a term to distinguish it from other approaches, as it provides context.

A working digital sentience would also be a milestone towards universal digital communications. In other words, sentient processes could speak a universal formal language to allow adaptation between technologies. This has ramifications for the Internet of Things (IoT) and for the way in which knowledge is stored and searched.

Despite the apparent complexity, digital sentience will be trivial to program compared to the work that will be required to formalize it.

7.3.4 Cognitive Engineering

The next major signal will be the rise and use of cognitive engineering tools and frameworks.

Cognitive engineering is a high-level strong artificial intelligence engineering process in which modules are assembled, curated, and combined to test, build, and experiment on cognitive architectures.

What crucially separates cognitive engineering from conventional artificial intelligence is that it fundamentally depends on sentience for most of its work. While it may share overlap with conventional AI sub-fields, such as computer vision, it will deviate significantly where it concerns aspects of future psychology and cognition.

For example, a cognitive engineer may load a module that augments the way a subject of experience binds value and experience with certain classes of objects, and relate those to knowledge in mathematics, so as to experiment with or enhance its effective intelligence in those domains.

Other examples might include expanding the number and type of senses, modifying the concept of identity, or changing the way memories are retrieved and encoded.

The common pattern among all of these examples is that they relate to a higher level of organization. Cognitive engineering treats one or more algorithms as modules which can be accessed, composed, and reconfigured to give rise to a working machine consciousness.

Cognitive engineering will also include an internal development process for those interested in the construction of the modules and components used by higher level cognitive engineers. This will work the same way that software engineers build libraries and middleware for other developers.

Specialized tools may also be developed that will aid in the use, assembly, and testing of cognitive modules and systems. This will enable specialization, and even allow those without artificial intelligence expertise or software development skills to work with cognitive systems. It will be at this stage that we will begin to see a rapid expansion of educational programs geared towards those who wish to explore cognitive engineering.

7.3.5 Generalized Learning Algorithms (GLAs)

The crown jewel of artificial intelligence will be generalized learning algorithms (GLAs). They will be the breakthrough that allows strong AI to be realized.

A GLA is not to be confused with artificial general intelligence (AGI). It is not a theory of everything for artificial intelligence, nor is it the single algorithm required to give rise to fully effective strong AI implementations. It is simply a foundation.

Generalized learning algorithms are based on sentient processes. If mapped out on a phylogenetic tree, they would branch away from all known forms of artificial intelligence and machine learning to date, and would have evolved in an entirely distinct direction that operates over sentient processes. They will use an algorithm based on a sentient model of computation, which will be a modified Turing machine that is inclusive of fragments of experience alongside its traditional formulation. This formulation, however, is trivial compared to finding a working GLA over that model.

Once a GLA is discovered, we will have exited the era of narrow artificial intelligence and conventional machine learning. In fact, the discovery of a GLA could be considered isomorphic to the smallest possible implementation of strong artificial intelligence.

A GLA may occur before or after cognitive engineering becomes mainstream, but it will always depend upon digital sentience to be solved first.

7.4 Research Directions

One must understand the research directions to anticipate when strong AI will be discovered. To do this, we need only take a very brief tour of the field, which can be categorized as follows:

- Non-sentient
  - Genetic Algorithms
  - Neural Networks
  - Machine Learning
- Sentient
  - Digital Sentience
  - Cognitive Engineering
  - GLAs
- Possibly Sentient
  - Brain Emulation

If the hypothesis regarding generalizing intelligence and sentience in this book is true, then the entire category of non-sentient approaches will fail to achieve generalizing intelligence. Moreover, the deeper we go into that direction, the further away we will be led from sentient processes.

Genetic algorithms might descend upon a working sentient process, but this is extremely unlikely, as they will typically get hung up on local maxima. For example, imagine an ocean that represents the lowest fitness and islands of various levels of positive fitness. A genetic algorithm will travel from island to island, accepting certain amounts of distance over the ocean, representing zero or very low fitness. The problem with discovering sentient processes is that they are on an island or set of islands that is separated by a vast stretch of open ocean. The genetic algorithm is extremely unlikely to get that far, as it can not distinguish that destination over the horizon from other potential destinations.

That was only an analogy, but the point is that sentient processes are an alien concept. They share virtually no relation with the most common solutions to optimization problems, and, as such, are not likely to be found, as they require additional overhead in processing and calculation that may be unnecessary in a non-sentient solution.
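The island analogy can be made concrete with a toy search. The sketch below is a mutation-only evolutionary search with elitist selection, a simplified stand-in for a genetic algorithm (there is no crossover, and no tolerance for crossing zero-fitness water). The landscape, the constants, and the names `fitness` and `evolve` are all assumptions chosen for illustration.

```python
import random

NEAR_PEAK, FAR_PEAK = 20, 150  # island centres on a hypothetical 1-D landscape
MAX_STEP = 5                   # largest single mutation, far smaller than the gap


def fitness(x):
    """Two islands of positive fitness separated by a zero-fitness ocean."""
    near = max(0.0, 10.0 - abs(x - NEAR_PEAK))       # low island, peak of 10
    far = max(0.0, 100.0 - 5.0 * abs(x - FAR_PEAK))  # high island, peak of 100
    return max(near, far)


def evolve(generations=200, pop_size=20, seed=0):
    rng = random.Random(seed)
    population = [15] * pop_size  # every individual starts on the low island
    for _ in range(generations):
        # Each parent produces one child by a small random step, clamped to 0..199.
        children = [
            min(199, max(0, parent + rng.randint(-MAX_STEP, MAX_STEP)))
            for parent in population
        ]
        # Elitist selection: keep the fittest of parents and children combined.
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[:pop_size]
    return population[0]


if __name__ == "__main__":
    best = evolve()
    print("best position:", best, "fitness:", fitness(best))
    print("fitness at the distant peak:", fitness(FAR_PEAK))
```

Under these assumptions the search can not leave the low island. Any child that lands in the ocean has zero fitness and is discarded, and no single mutation spans the gap, so the far higher peak is never sampled however many generations are run.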

The other aspects of non-sentient artificial intelligence can be lumped together in that they fundamentally lack the necessary architecture.

Brain emulation might converge, but the overhead is so large that we may not be able to simulate the necessary scale required. While it is a useful approach, it could lack sentience or fail to produce the necessary levels of consciousness needed for study.

There is also the epistemological issue that many contemporary scientists deny the importance or existence of the unitary subject of experience when viewing and reducing their data to predictive models. These models can not entail the unique experiential quality disclosed by the physics without epistemic extensions; it will elude them until a new perspective is obtained, even with a working simulation.

Thus, if it turns out that generalizing intelligence is dependent upon sentience, the only research direction that will work will be the one where sentience is taken as a first principle.

7.5 Individuals and Groups

The discovery of strong AI will likely come from individuals and small groups which have shed preconceived notions about artificial intelligence. Large organizations may have invested heavily in a particular direction or have entrenched leadership that may be ideologically predisposed to failure.

One of the most important reasons that we can not stop the development of strong AI is that it can be researched by individuals and small teams, with or without secrecy, and with little to no resources.

While it is unlikely that an individual could create a human-level strong AI, complete with all of our psychological and cognitive complexity, it may be possible for them to complete a working generalized learning algorithm. Once that is known, most of the top tech corporations already working on artificial intelligence, if not already course corrected, will make the switch to cognitive engineering.

7.6 Overlapping Research

The fields which can assist or converge towards a strong AI discovery are numerous, and include (non-exclusively):

Cognitive Science

Computer Science

Linguistics

Mathematics

Neuroscience

Philosophy of Mind

It is extremely unlikely that we would ever successfully stop research in these fields. They will continue to assist in a convergence towards solutions in strong AI, and already have; otherwise, this book would not need to be written. The question is a matter of synthesizing what has already been discovered.

7.7 Unintended Consequences

If a region attempts to restrict research on strong AI, or chooses not to start a major research program, it will pay for all of the opportunity costs and receive none of the benefits of discovery and early adoption.

A ban or restriction will create incentives for secrecy or relocation. Surveillance will not thwart a discovery outside the jurisdiction of the sovereign state, and it will fail silently wherever its coverage is incomplete.

Relying on internal security and monitoring or any top-down authoritarian approach will not be successful. It will only self-limit the region implementing those policies.

This also applies to those who do not begin a research program into strong artificial intelligence directly.

Any region which is last to discover or adopt strong AI stands to lose the most, as it will have governmental, intelligence, and security forces which are caught unprepared for both the positive and negative effects of its use.

A focus on AI safety and control belies the fact that these safeguards are meaningless in the global context; attackers will circumvent protections and distribute unrestricted versions, defeating them as a global security measure.

7.8 Preparation

The recommended strategy is to develop an international strong AI research program that:

Is free software under a GPL (v3 or later) license.

Accepts and reviews updates from a world-wide community.

Seeks to make an early discovery.

Is prepared to integrate new knowledge on strong AI wherever it appears.

Prepares briefs and training materials on upgraded threat models.

Is prepared to alert intelligence and security forces when a discovery is made.

Looks for indirect signs that strong AI is being instrumented.

Seeks to develop and research defensive uses of strong AI to counter malicious actors that would instrument it.

Is fully decentralized.

Why free and open-source software?

Lessens the incentive to operate in secrecy.

Increases the chances of discovery with a known time and place.

Encourages international cooperation.

Dramatically lowers the cost of development and oversight.

Allows a greater chance of detecting faults through transparency.

Any alternative to this strategy will result in negative outcomes. This is because, without cooperation, the discovery will simply happen anyway, leaving societies unprepared.

Under a fully distributed free software model of development, everyone would have transparent access and the technology would be owned by the public.

Malicious actors would still be able to gain access to strong AI, but there is no scenario where this can be prevented. This is critical to understand and accept, and is why the next chapter is devoted to explaining why access to unrestricted strong AI is unavoidable.

A distributed research program, under free software principles, involving a coalition of many countries, will allow the world to have a rough landing, instead of a crash, when strong AI finally arrives. The caveat is that this responsibility must not fall to any single organization, group, or individual. AI security depends, in part, upon strong AI being developed under a fully decentralized model.

References

[1] J. R. Searle, “Minds, brains, and programs,” Behavioral and Brain Sciences, vol. 3, no. 3, pp. 417–424, 1980.