Ch 11. Economic Analysis

This chapter provides an overview of the economic and social impacts of advanced automation, including descriptions of various government programs to reinforce the economy during the transition to a fully automated society. It also goes into depth on the social and psychological conditions that may well define the generations ahead.

11.1 Introduction

An entire book could be written on just the economic implications of strong artificial intelligence. This chapter, however, focuses on only the most immediate and serious aspects related to social stability and security as a whole. It applies a combination of deduction and forecasting based on what has been covered so far.

Even if one does not believe in the likely development of this technology, the severity of its impact remains the same; the economic changes brought about by advanced automation will always be significant for the simple reason that it displaces human labor.

Labor is, and always has been, what it is all about. “It,” of course, refers to the history of economic ideology, which ties in with political and cultural ideology. They are inseparable. Human values are deeply intertwined with economic thought and the various systems that implement it. However, the one thing that is always at the center of it all is labor. Human labor, to be exact, although we have never really had to qualify it before now. While there have been Industrial Revolutions, pitting us against machines, we knew the need for human labor would remain. It would change, time and again, from service-oriented to knowledge-, information-, and, ultimately, attention-oriented work, but these were just different names for the same underlying principle: humans have been massively involved in and completely integral to the global economy. This is something that we all know, and it should not be a surprise that it will change.

Human labor will be gone as an institution and we will be better for it. It is the single most important early contribution that advanced artificial intelligence will make. However, great and important change never comes without cost. There will be those who will want to exact a price on progress at the expense of us all. They will stay just enough ahead of change to lease the future back to the rest of us. When money is no longer an object, their most prized asset will become control, which is the business end of power. These minds will be a far greater threat than the sentient processes we use to automate the economy.

Because it did not anticipate the proliferation of fully automated labor, most conventional economic thought will no longer apply. Human beings will no longer be obligated to work. As such, there will need to be new economic theories that can account for the ownership, distribution, and allocation of resources under the conditions of total automation. The true scale and extent of such economic theory could fill volumes, and it is likely that much will be written as we grapple with this newfound resource. From a security standpoint, however, this is tangential. What will be discussed here is focused squarely on preventing the most extreme negative outcomes and mitigating the damage from those outcomes that are unavoidable.

Before we begin, however, it is important to pause for a moment to contemplate the desolation that is the modern condition. While many readers will find that their level of comfort is well and good, if not without a sustained and relentless effort, it is not the case for all others. The scale and scope of what is being considered here is global and total; all life is brought into focus. Right now, as these words are read off this page, countless human and non-human individuals are living in proverbial purgatory. There is no way for any single person to evaluate this amount of suffering, but that is all the more reason to take it to be one of the most pressing problems of our time. This was foreshadowed in Chapter 2: Preventable Mistakes.

Let us suppose that strong AI can transform the economy by providing a self-sustaining labor system, and that we can distribute that expertise and ability around the globe, for all societies, no matter their economic situation. Then it stands to reason that the underutilization of strong AI will result in immense opportunity costs on the prevention of suffering and loss of life.

Consider the number of preventable deaths, and the loss of quality of life, that occur each day that we delay the development of this technology. Any individual or organization that works against this development is culpable in prolonging the conditions of global suffering. It is akin to a war. Denying an end to that is morally no different than enabling it to proceed. So it is the same for the development of strong artificial intelligence; to delay it is morally equivalent to enabling this suffering to continue for every day by which its arrival is held back.

The counter-argument to this fact is typically the Precautionary Principle, but that is so incredibly weak and ineffectual when one considers the points brought up in all of the chapters preceding this one. There is truly no justification, except for the relatively short time-period we need for societies to prepare, which can be done well in advance of the discovery of this technology.

These problems are economic in nature, and the solution is the application and deployment of automated, fully human-capable labor. Rather than fear this change, we should accept it as the natural progression of the human condition. Ironically, total automation will allow us to be more human than ever before. This is because, in a very real way, everything we have created has been through the creation of artificial systems that constrain the natural state of affairs. This is true not only in the tangible sense, but includes the social systems we use to constrain and stratify ourselves. The latter refers to the social, political, and ideological aspects of economics. Indeed, one of the most difficult challenges ahead will be our struggle to realize the freedom and equality that automation will bring.

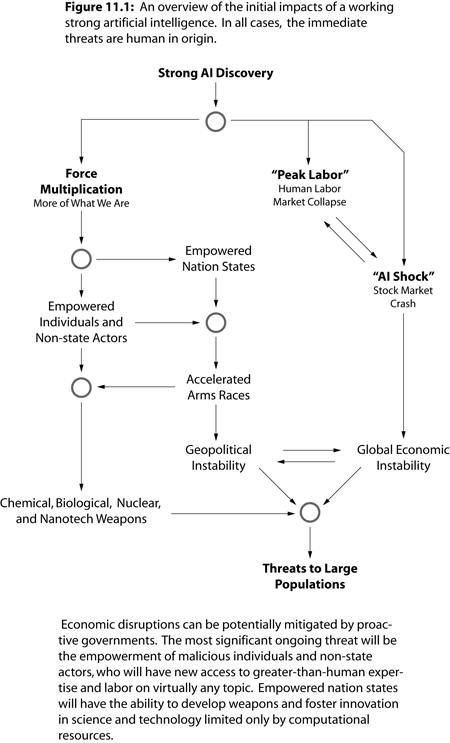

Figure 11.1 depicts a high-level view of the immediate impacts after the discovery of strong artificial intelligence. It effectively summarizes the AI security analysis into two major areas, which are depicted on each side of the diagram.

On the left, we have force multiplication and all of the resulting issues that will bring. On the right is the economic disruption and change that will have to be endured in order to reach the stability found on the other side of automation. Both have to be fundamentally addressed on a person-to-person level.

Conventional forms of governance will not be effective, as they will not be able to adapt quickly enough to the changing conditions on the ground. Society will move faster than can be tracked, as this particular economic revolution will likely be powered and carried out by a vast empowerment of individuals. This is in contrast to situations where large industries arose. While individuals are always part of the economy, the distinction here is that these changes will be brought about by the enhancement of every person’s access to knowledge and skill.

It should be pointed out that arms races have never actually ended between nations. Someone is always developing weapons platforms and technologies. This will be accelerated by advanced automation and is unavoidable until we fundamentally change as a species. It is delusional to believe that future battlefields will not be defined by autonomous weapons platforms. Those nations that fail to weaponize artificial intelligence will be at the mercy of allies and enemies who have. From the perspective of societies that fail to adapt, conflicts may involve them taking significant loss of life, with their aggressor only suffering logistics costs and no associated human casualties.

With AI force-multiplied warfare, nation-states and bad actors that were previously incapable of advanced technology will suddenly gain those abilities. This includes having the most effective drones, munitions, and strategies. If we expect that they will utilize strong AI, then it must be presumed that their military forces will become capable of equal projection of force. Add ideology and delusion into the fray and we have an explosive compound that could detonate at any time. This will be our most fragile era, as the methods and the means to harm large populations will be available to the general public for the first time.

Hopefully, prepared societies will have spent more resources than are necessary to deal with the potential problems that might arise from the adoption of this technology.

Lastly, each of the topics herein is broadly categorized into one of three eras: pre-automation, transition, and post-automation. For formatting reasons, only sections are numbered. The start of each conceptual era will be introduced in the relevant section and will continue until another era is introduced.

11.2 Day Zero

Pre-Automation Era

This is the day that strong AI is discovered and becomes known to the general public. As was discussed in previous chapters, these two things may not coincide. The best possible scenario, though, is the one in which they do. As such, the most altruistic act that any engineer or scientist working on this technology could perform would be to ensure that the largest number of people find out about and gain access to this technology at the same time, as asymmetries will be one of the greatest risks. This, of course, brings up one of the most important points of this monumental day in our future history. Just as there are zero-day exploits in software and hardware systems, where attackers find and utilize unpublished vulnerabilities, there may be individuals and organizations which are dormant and poised to take advantage of strong AI at the moment of its inception. In fact, the entire goal of AI security as a global strategy would be to include every government in this set of organizations so that the effect of any of these sleeping giants would be amortized over world powers.

Zero-day readiness is one of the most important economic aspects, as these will be the people and organizations that have considered the future and have made plans to embrace it fully when it arrives. They will be uniquely positioned to integrate with and act on the technology in whatever way it enables. The advantages this brings are incalculable, especially when such a technology rewards those who use it first. It is almost impossible to anticipate fully the personal singularity that will occur for each person who exploits strong AI on the first day. It will be akin to an economic genesis. They will be at the forefront of the possible and impossible, and will push the boundaries of technology to the breaking point.

Meanwhile, the rest of the world might be unaware. In this sense, it will be as if early adopters had made the discovery themselves. That is the point of day zero as a security concern. By not adopting the technology, one is forfeiting all the opportunities that it brings to others, for better or for worse, and it is highly likely that malicious individuals and groups will make up a large proportion of early adopters. Consider the realities of zero-day exploits in software. Say what one will about malicious users of technology, but they tend to be extremely knowledgeable in their craft to locate and exploit weaknesses so quickly. The most high-tech organizations are likewise just as efficient in their optimization over people and resources, and will certainly have the minds and the means to exploit strong AI once they have it. However, this is not some new market segment or product; governments cannot just rely on the private sector to be prepared.

The balance of power for advanced automation relies upon everyone having equal access. The only question is how to maximize the total number of people who become aware of the discovery. This will be discussed in detail in the next chapter, which is where the global AI security strategy is finally presented. For now, the important point is that day zero will be of critical importance, as it sets the starting conditions for a dynamical system that will unfold rapidly. It may even be the case that the outcome of our fate with this technology is decided by those who are there on the first day to utilize it.

Thus, in the clearest language possible, governments should have a department that is trained and specialized in not just narrow AI but the principles discussed in this book regarding strong artificial intelligence. In particular, this means the construction and analysis of cognitive architectures and sentient processes, as they may very well pave the way for true generalizing intelligence.

It must be reiterated that it will not, in general, be possible to prevent others from gaining access to strong AI. Regulation and bans will be meaningless. Just as millions download illegal copies of movies and music through peer-to-peer protocols, there will be means of gaining access to strong AI through distributed networks across the Internet.

Regardless, the focus should not be on limiting who has access but on spreading it as widely as possible. No single organization can be entrusted with this responsibility. This is a most critical point. There will be numerous individuals, organizations, and governments positioning for power over this technology. They must not be allowed to dictate who uses it. A technology solution will be required to overcome this most fundamental issue, and it involves the exact opposite of the initial reaction to restrict and limit the distribution and use of advanced automation. The side-effect is that it will enable instant access, but that is just accepting the inevitable. If this technology is possible at all, then it will eventually be available globally through the Internet, and all of the AI security concerns will apply.

As far as resources and preparation are concerned, it is recommended that existing cybersecurity departments and intelligence agencies become the first point of contact for various governments with this technology. These organizations typically have the training and equipment to respond to such a level of sophistication, and will be in a unique position to deal with it and assess its capabilities. They may also have the ability to monitor and track others’ use and integration with it as it proceeds. Ideally, however, there should be a specially trained department and task force that is given the ability to communicate with all areas of local, state, and federal governments, such that, when the time comes, integration, use, and deployment of strong AI will be possible.

At the very least, there should be the means to communicate with all levels of government across society. This will ensure that security, police, and intelligence forces will be prepared for what is to come. In a perfect scenario, we would have already developed the formal methods and systems to prove safe integration in advance, but this is extremely unlikely. What should be assured is direct communication and coordination between departments. In the simplest terms, this team should have the capacity to supervene where necessary to impart critical information about the unfolding situation involving automation events until such time that their services are no longer required. While broadly stated here, it is the intent that such powers be narrowly tailored as it grows closer to the time that the department is realized.

From day zero will follow a series of irreversible changes to humanity, only we will not feel the effects until a later date. The first signs will be economic. The very knowledge of the discovery could cause some of the events described in the following sections to occur out of order. For example, AI Shock, which is essentially a stock market crash, could occur either immediately or within days of the news that strong AI has been discovered.

The crucial point of this section is to remember that the changes will be done on an individual level, person-to-person, one download or interaction with strong AI at a time. There will be a certain lag time that is necessary, as strong AI would have to be instrumented in a physical form to be of use for many applications. However, this could be mitigated by those who have access to complex robotics and existing automation. This is, in fact, one of the major aspects of those day zero organizations’ plan for readiness. Equipped with strong AI, even crude robotics will be capable of immense effectiveness, limited only by the resources and direction given by those who use it.

There will eventually be a balance as the average adoption rate rises and people begin to use strong AI to compete individually. However, this does not change the fact that those who adopt early will gain significant advantages over the rest of the population.

Governments are typically slow to react and are ill-suited to dealing with the pace that is normally handled by the private sector. This is a warning that should be addressed; it will not do in an automated economy. As such, special administrative provisions are recommended to enable rapid response. Governments will need to compete with and track the events unfolding around the world. While this is clearly within the capability of certain intelligence and military departments, it has not necessarily been the case with all levels of government. Day zero will exploit any lag time and will cause a failure from a security perspective. This should be seen as a hard real-time system where populations depend on the fastest possible response. Any delay will result in vulnerabilities due to a state of unpreparedness. There will be some room for error in the early period, as many will need to become familiar and interface with the technology, but, beyond this, it will become increasingly time-sensitive.

11.3 Rapid Automation

Following discovery and distribution will be the use of strong AI in every area of society. This will begin a rapid phase of expansion not unlike the growth experienced by those living in the times surrounding the Industrial Revolutions. Rapid automation is almost certainly unavoidable and is the simple deductive consequence of the natural tendency to want more for less. It is economically rational and optimal to utilize advanced automation to make everything better. This is a fairly straightforward concept. Everything that can be automated should be assumed to become automated eventually. Any laws or regulations that limit automation will see the affected businesses move elsewhere.

The new economic centers of the world will be decided by those that are most welcoming to automation and those who are in the know about how to manage, maintain, and exploit this new resource.

Initially, new jobs and areas of expertise will open up as people look for ways to capitalize on the technology. There will be a very high demand for education, training, and integration of the technology into every aspect of society. Those who were formerly working in one field may find themselves consulting on how to integrate strong AI technology to replace themselves. This is because it will be those who have the most experience in their respective fields who will have first-hand knowledge of how to incorporate it best. Technical expertise will become less and less valuable. Meanwhile, job experience and people skills will become more valuable. This is because technical knowledge can simply be queried from the strong AI systems themselves, whereas contextual and interpersonal knowledge will be more time-consuming to acquire. The important services will be from those who can find creative ways to adapt automation for our use.

Rapid automation will be fast from a historical perspective, but it will still take a significant amount of time. Global-scale strong AI robotics will be costly and require enormous production facilities. There are also issues of local AI safety and security to be addressed. The sheer volume of demand will provide a period of relief, and will also see specialization in the development of autonomous systems and drones for private and public use.

There will be extreme demand for general-purpose robotics systems that can be safely and securely deployed in a wide variety of environments. Not all of them will be bipedal. At least, not initially, as it is highly likely that specialized robotics will be used that are incorporated directly into buildings and infrastructure, so as to limit mobility and maximize safety. These are common sense measures that will reflect consumers who will be adjusting to an era of increasing automation.

There will be considerable distrust and concern during this time. The shock of such changes should not be underestimated when developing products and services around strong artificial intelligence. This goes far beyond the uncanny valley and into the psychological roots of the human condition. Automation will be seen as a singular entity, despite being just an aggregate of different models from many distinct manufacturers and developers. This will take on a cultural dimension and may become a de facto “race” in a proverbial us-and-them mentality. This foreshadows the coming sections on resistance to change.

It would not be surprising if, during the rapid automation phase, we came to see at least one form of strong AI robotics being integrated into every building. Eventually, people will begin to adjust to, and even rely on, the benefits provided by having automated labor and expertise.

While it may not be possible to fully predict the range of impacts from automation, there is at least one certainty: it will displace human labor almost immediately. This is especially true for tasks which do not require physical manipulation of objects, or where strong AI can be exploited entirely through digital means, such as with knowledge work.

11.4 Peak Labor

Peak Labor is the point where automation has sufficiently displaced human labor to the extent that conventional economies are no longer self-sustaining. This would be the end of human labor proper and should be seen as a desired outcome. The problem, however, is how we will deal with and mitigate its temporary negative effects. There is concern that economies will stall or collapse, and that people will no longer be able to support themselves and their families. Entire ways of life will be uprooted, and some people will feel lost or lacking purpose.

Dealing with Peak Labor is not just an economic issue but a social and psychological one. There is even a philosophical component, as an entire generation of people will have to come to terms with a life that is no longer determined by the pursuit of material worth. The pursuit of happiness will remain, but the means by which it will be attained will be dramatically altered. This remains true even if governments have planned in advance to step in to prevent the complete collapse of their economies.

This is akin to the majority of the human workforce going into early retirement. There will still be work, but the artificial construct of a career will have ended. People will be free to pursue their dreams without limitation of wealth or status, and they may find that the greatest challenge is finding purpose with more freedom than they have ever had before. The other side of this equation is that those who were accustomed to exclusive status will find themselves potentially without a platform. These will be the first signs of a great flattening of the hierarchies that make up society; however, there will always be those who find new ways to stratify themselves over others.

The vast majority of the general public will not see Peak Labor as a positive. It is important, however, that everything possible be done in advance to prepare them for it. One of the greatest risks of economic disruption is social collapse. There have been instances of mass rioting, looting, and general panic over much lesser events. Many will see this not as progress but as an attack on their traditions, identity, or heritage. They have a point, but it can only be sustained so far. What they would be asking will not be possible, as the change will be the result of the combined actions of millions of individuals simultaneously around the world. The economy will simply be a reflection of each person that embraces strong artificial intelligence to make improvements and add value to the world. It is not something that can simply be halted or switched off once started.

What should be understood is that the concept of a “job” is entirely synthetic. This notion that we are born, become indoctrinated, and develop into productive members of the economy is a game we are all forced to play; many will enter, few will win. None feel this is perfect, yet we acquiesce because it could be much worse, and after all, a lot of good does come out of it. However, the fact is that the modern economic system is as artificial as the intelligence being written about in this book. This includes everything from corruption and inflated systems to the meaninglessness of most jobs. Someone has to do the work, and we have no perfectly fair way to organize it in a free society, so we let it sort itself out.

Even when doing well, people find themselves lost in endless, repetitive tasks that rent out the best part of their lives for the prospect of paying for a place in which they spend less than half their waking life. For others, there is no work at all, only warfare and starvation. There has to be a better way.

One of the largest criticisms of total automation is the fear that people will not want to work at all. The problem with this argument is that work will be redefined. People will eventually want to do something meaningful with their lives that only work can fulfill. The difference is that they will be doing the kind of things they have always wanted to do, and it will not seem like work to them. They will simply live out their lives as they might have always wanted. While some people already enjoy this, it is not the case for the majority, and it may not have even occurred to some that their most ideal life is not even known to them at this time. That is to say, what might occur to even a relatively happy person under complete economic freedom may be vastly different than what they think is their current “dream job.” It is often the things we do not imagine that cost us the most.

To begin to explore this, however, we will need to have systems put in place by proactive governments. The income tax system could be re-purposed into an income system that functions to prevent the total collapse of the economy during the transition to a post-automated era.

While the economy is likely to change radically, it is unrealistic to expect that money will vanish. There will still be specialization, even with full automation. In this case, classic concepts from economics still apply. What will change, however, is that what we formerly relied upon from other people will instead be provided by drones, many of which will be privately owned. Thus, the purpose of the income system is to ensure a stable transition from pre-automation to post-automation. While it will not be made fully clear as to how that kind of system would be made self-sustaining until later, the key component is that such value could be supported by the automated workforce itself, and that individuals could utilize automation to work in their stead. The specifics are not as important as ensuring that, at least initially, an income system is ready to move into place well before Peak Labor occurs.

The general sketch of the income system could involve using national identification to access funds given by governments, in which payments would be disbursed monthly. Alternatively, individuals could register over the Internet and set up a direct deposit, and would be provided with a bank account if they do not yet have one.

The specifics may vary, but the general principle is that every individual within a country should receive currency, regardless of their economic and social status. Care would have to be taken to prevent fraud, but the worst possible implementation would be one where people have to visit government offices in person. Such offices have never scaled well and could be detrimental to public acceptance of the program. The income system needs to be as painless as possible, which is why it should be accessible completely electronically. It will be one of the most important aspects of supporting the economy through its transition, so it needs to scale with populations and be streamlined for an automated age. If physical offices are used to assist people, then it is recommended to distribute the service by cooperating with banks; they already exist and have the exact infrastructure required. As a result, they can offer extended support for the income system much more easily than any other solution that requires in-person services. If this is done, any and all fees taken for their services should be explicit in the transactions, rather than being deducted before disbursal. This makes the payments transparent and verifiable by any member of the public, provided the tax rate on automated workers is known.
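The point about explicit fees can be made concrete with a small sketch. The following Python is only an illustration under assumed names: `Fee`, `Disbursement`, the field names, and the amounts are hypothetical and are not part of any proposed system. It shows a payment record in which the gross amount is recorded first and every fee appears as its own line item, so the net deposit can be checked by anyone who sees the statement.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Fee:
    """A single, explicitly itemized fee (hypothetical structure)."""
    description: str
    amount_cents: int


@dataclass
class Disbursement:
    """One monthly payment; the gross amount is never reduced silently."""
    recipient_id: str
    gross_cents: int
    fees: List[Fee] = field(default_factory=list)

    @property
    def total_fees_cents(self) -> int:
        return sum(fee.amount_cents for fee in self.fees)

    @property
    def net_cents(self) -> int:
        # Net is derived from the visible line items, not stored separately.
        return self.gross_cents - self.total_fees_cents

    def statement(self) -> List[str]:
        """Return the lines a recipient (or auditor) would see."""
        lines = [f"gross payment: {self.gross_cents}"]
        lines += [f"fee ({fee.description}): -{fee.amount_cents}" for fee in self.fees]
        lines.append(f"net deposited: {self.net_cents}")
        return lines


# Hypothetical example: amounts are in cents and purely illustrative.
payment = Disbursement("ID-001", 200_000, [Fee("bank processing", 150)])
for line in payment.statement():
    print(line)
```

Deriving the net amount from the itemized fees, rather than storing a pre-reduced figure, is one way to keep the deduction verifiable; other designs are possible.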

This brings us back to the point about sustainability. A key idea would be to redistribute the value generated by an automated workforce back into the income system, while also allowing free trade and commerce amongst people who utilize their privately owned artificial intelligence to produce further value in the economy. It could range from simple products and services on up to massive corporations that exploit economies of scale.

This creates incentives for individuals to produce value, and rewards them under essentially free market conditions, while ensuring that each person of a given society has a minimum standard of living that is appreciably high. This will create the conditions for a more perfectly competing economy, as with access to strong artificial intelligence, each person will have the potential to compete on some level by using the positive aspects of force multiplication. This, of course, is highly dependent upon the method in which that value is acquired, and will vary based on how that workforce is maintained. Ideally, the automated workforce would be created by the individuals of the population, as this is closer to the way in which many economies already function in free societies.

The large scale purpose of the income system is to ensure that the economies that are free and mixed do not slide into command economies under a different model. This is why the automated workforce should be decentralized and independently operated.

One method to achieve this would be to create an automated version of a free market economy that uses special taxation on automated workers. In a sense, this could be viewed as paying the machines to work and having those proceeds distributed back to the public. Private ownership of automated workers would still be possible, along with making profits after taxation, just as it is typically done now. For security and accountability purposes, a law could be passed requiring registration of automated workers. There could also be a voluntary monitoring system that would provide a slightly lower taxation level in exchange for remote monitoring of the automated worker in order to precisely meter its labor production. This would assist in mitigating tax evasion, which would clearly harm the income system and the whole of society.
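As a rough illustration of this arrangement, the sketch below models registered automated workers, a base tax rate, a slightly lower rate for voluntarily monitored workers, and an equal distribution of the proceeds. Every name and number in it is an assumption made for the example (`AutomatedWorker`, the 30 percent base rate, the 5 point discount); the text does not specify any rates or data model.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical placeholder rates, expressed in whole percent.
BASE_TAX_PERCENT = 30
MONITORING_DISCOUNT_PERCENT = 5


@dataclass(frozen=True)
class AutomatedWorker:
    registration_id: str     # registration assumed to be required by law
    owner: str
    output_cents: int        # value of labor produced in the period
    monitored: bool = False  # owner opted in to remote metering


def tax_percent(worker: AutomatedWorker) -> int:
    """Monitored workers pay a slightly lower rate."""
    if worker.monitored:
        return BASE_TAX_PERCENT - MONITORING_DISCOUNT_PERCENT
    return BASE_TAX_PERCENT


def tax_due(worker: AutomatedWorker) -> int:
    """Tax owed on one worker's output, rounded down to whole cents."""
    return worker.output_cents * tax_percent(worker) // 100


def owner_profit(worker: AutomatedWorker) -> int:
    """What the private owner keeps after taxation."""
    return worker.output_cents - tax_due(worker)


def income_per_person(workers: Iterable[AutomatedWorker], population: int) -> int:
    """Pool all tax proceeds and split them equally across the population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return sum(tax_due(w) for w in workers) // population


# Illustrative figures only.
fleet = [
    AutomatedWorker("AW-1", "alice", 100_000),
    AutomatedWorker("AW-2", "bob", 200_000, monitored=True),
]
print(income_per_person(fleet, population=4))
```

Integer cents and whole-percent rates are used here only to avoid floating-point rounding in the example; a real scheme would need its own rounding and metering rules.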

It may sound extravagant, but the tax rate on automated workers would not be as high as it would be if it were calculated based on current economies. This is because, in a post-automated economy, many expenses will be a fraction of what they were in pre-automated economies. This will be due to the efficiency and lower lifetime cost of machine labor. The adjusted income level will reflect the non-discretionary expenses of a new economy. Thus, sustainability of the income system, along with acceptable taxation, will be much more likely when viewed in this light. The takeaway from this is not to judge the fiscal viability of the income system through the economic models that are currently in place, which are bloated for various reasons, not the least of which are simple corruption, greed, and the need to create jobs. So, it should be possible, in principle, to have a higher quality of life with significantly less expense and a realistically sustainable taxation.
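The reasoning above can be stated as a simple balance, offered here only as a sketch. All symbols are assumptions introduced for illustration: $N$ is the population, $b$ the payment per person per period, $V$ the taxable value produced by automated workers per period, and $\tau$ the average tax rate on that value. If the income system is to be funded entirely by this tax, then

$$
\tau V = N b \quad\Longrightarrow\quad \tau = \frac{N b}{V}.
$$

If non-discretionary expenses in a post-automated economy fall to a fraction $\alpha$ (with $0 < \alpha < 1$) of their pre-automation level $b_0$, so that $b = \alpha b_0$, the required rate becomes

$$
\tau = \frac{\alpha N b_0}{V}.
$$

Under these assumptions the required rate falls both as costs drop (smaller $\alpha$) and as automated output grows (larger $V$), which is the sense in which present-day figures would overstate the tax burden.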

For example, it will be possible for people to make a single purchase of a strong AI-equipped drone and use that to produce or service everything else they might want or need, all at wholesale or material cost. The only limitations would be what people would be willing to trust it to do and the available space and resources for it to work. Reproducing other robotic systems would likely be a large part of the time spent in the early stages of Peak Labor, as individuals would want to ensure that they have the means to repair and maintain their support automation to produce what they need.

There are many more scenarios that could be envisioned, but these were only sketched here to begin the discussion. The real objective is to prevent the economy from stalling. Preventing such a stall is perhaps the easiest measure to take, although it will not come without resistance.

11.5 AI Shock

AI Shock is the hypothetical stock market crisis that will be caused by investor panic over the discovery and use of strong artificial intelligence.

Mitigating AI Shock would involve addressing any stalls in the consumer economy through the use of the income system or its equivalent. However, full prevention of AI Shock may not be possible, as it could simply result from an expected level of fear, much of which has already been suggested to the public imagination. If this situation does occur, it will aggravate the labor problem, possibly creating the conditions for Peak Labor, if it has not already occurred. At this point, the only provisions keeping the economy from a complete collapse will be government programs.

One of the dangers here is that artificial intelligence attracts discussion very differently than other subjects.

For example, there is a propensity for people to explain the behavior of artificial intelligence as if it were a single race, or to assume that it will have instincts for survival and the desire to dominate and expand for ever more resources and power. Proponents of the intelligence explosion and takeover myths even believe that this will happen by accident, that it will be the default outcome without human intervention and control. By now, it should be clear as to why these are misguided and dangerous ideas.

These issues were discussed in Chapter 4: Abstractions and Implementations. There is an extreme propensity for anthropocentric bias when discussing AI. This is because people believe that it is a sign of intelligence to discuss the nature of intelligence itself. This is at the root of the problem, as it reduces the effectiveness of outreach and education. This may be blunt, but it is true: people think they know when they do not, which makes it significantly more difficult to teach about this subject. As a result, a kind of folk artificial intelligence has arisen, which has been perpetuated by online communities, popular media, general fear, and ignorance.

The actual implementations of these systems are like nothing we have seen before. Yet they are discussed as if we could make analogies and infer their mindset. This is tragic, as it will warp perceptions and make the pre-automation era extremely volatile.

When people believe that they categorically know how artificial intelligence behaves, they are always using ad-hoc reasoning. Unfortunately, there has been a rash of organizations and individuals spreading fear and misinformation, entrenching these misguided notions even further into public awareness.

There needs to be an active counter to the misinformation, and this begins with science education and outreach on advanced artificial intelligence. This is a community challenge that is going to require an active response.

So much weight is given to this because it will affect how people make decisions, and those decisions are the economy; when a sufficient number of people, for better or for worse, believe they know about artificial intelligence, and begin to take actions based on incorrect knowledge, it will have deleterious consequences on a global scale, as our economies are all interconnected. Thus, being wrong about the mental construction, motivations, and actions of artificial intelligence is a social and economic issue.

Misinformation, in this case, acts like a disease that interferes with the ability of people to make sound judgments. In a future context, where change is unfolding quickly, there will be a state of confusion and panic. It will be imperative that we have at least a modicum of humility and poise when it comes to how this technology works.

In short, artificial intelligence, no matter how optimal, does not imply survival, instincts, or ego, and there is no technical basis on which to make an argument that it does. The more we believe we know when we do not, the greater the consequences will be when proven wrong by the actual use of these technologies.

11.6 Prepared Societies

Prepared societies will have created a specialized division for advanced automation, and will be notified within moments of the discovery or release of strong artificial intelligence. They will have the ability to shunt, stimulate, and support their economies through a combined and comprehensive set of social, economic, and administrative programs that are able to withstand near total collapse. They will have a fully modernized digital infrastructure for governance that enables integration with and adaptation to strong artificial intelligence, along with hardened networks and information systems. Security forces and first responders will be prepared and have information tailored to the unique challenges and expectations of dealing with the public during the initial stages of the discovery. There will be a general state of readiness to deal with civil unrest and public concern.

Unprepared societies will not have participated in the development or monitoring of strong artificial intelligence. They will not integrate or adapt fast enough to automation. They may even attempt to ban or regulate automation in an effort to prop up fragile economic systems. They will have disparate governmental systems and departments that do not communicate instantly with each other. They will rely on proprietary software and hardware with unknown or unverifiable security properties. Unprepared societies will not treat the initial impact of even just the news of the discovery of advanced artificial intelligence as serious, and will likely respond with too much or too little force. These societies will ultimately rely on those societies that did prepare, and may find that they need military intervention.

The technical departments trained for a global AI security strategy in prepared societies will understand that sentience may be a necessary condition for generalizing intelligence. They will understand that, if this turns out to be true, it will have significant ethical ramifications, both for us and the strong AI we instrument. They will not assume that advanced artificial intelligence can only be the result of stochastic processes and obscure symbolic or biologically inspired designs. They will not have preconceived notions about the final form of strong artificial intelligence. They will know that synthetic personalities, the desire for survival, and even “desire” itself are completely arbitrary and independent of effective intelligence.

Unprepared societies will have listened to those who promote fear and misinformation. They will discuss artificial intelligence as if they understand it based on their experience with human and non-human animals. They will believe that they possess the ability to predict what any one strong AI implementation might do based on what they have seen in popular media or from experience with one implementation; they will not realize that each is potentially distinct and unique. Unprepared societies will try to ban or regulate research in an effort to control the problem.

By contrast, the most prepared societies will actively develop strong artificial intelligence as free and open source software using a fully distributed method. This means that no single organization will have to be trusted and that the public will effectively own it. Most importantly, prepared societies will create programs that anticipate the discovery and use of strong AI so that they may mitigate its impacts. This includes everything from college courses and science funding on up to creating new military specializations and government organizations.

11.7 Regressives

Transition Era

Thus ends the analysis of the pre-automation era and begins the transition era. This is where advanced automation has taken root, with significant portions of the world’s economies being partially integrated with strong artificial intelligence, but not fully.

The concept of “integration” has been used in this chapter while being left mostly implicit. What is meant by this is that it is to be taken as broadly as possible. Integration with strong AI literally means to incorporate it into every possible aspect of society, up to the limits of trust in both the technology itself and our use of it. It may also involve cybernetic integration. There are no limits to the meaning of integration as it is described here. This is especially important in the discussion about regressive attitudes, as this term should be considered the exact opposite approach to integration.

In this context, a regressive is any individual that holds the position that the advancement of artificial intelligence should be slowed or stopped, and either prefers or actively works to keep the economy in the archaic human labor system. There is no middle position; arguing against automation but wanting to keep human labor is regressive because it would be asking people to maintain the status quo. One must choose to either be for or against total automation, as that is what will occur as an eventuality. Our economies are already partially automated. It is not necessary to campaign for automation or ask for it as change. It will simply occur. Thus, it is the expected natural direction of progress and to stand against that is to ask, quite literally, all of humanity to come to a halt.

Regressives may choose not to use products and services that are derived from or involved with advanced automation. This is within their rights and should be respected among tolerant and free-thinking societies. However, with regard to AI security, certain regressive attitudes may lead to problematic situations, such as attempting to regulate or forestall a post-automated society, which is pointless and detrimental to global AI security, and may involve more extreme actions against automation itself or those who would use it.

It is important to understand the regressive mindset in order to overcome it. It is, in fact, already manifesting itself. This book is partially a response to it, and anticipates that there will be much more resistance to come. The difficult part is, unlike times past, this is not something that can simply be stopped. This is because these changes will occur at the individual level in every society with access to the technology. Those societies that do not embrace it will be dominated economically and culturally by those that do, especially as new generations come to accept advanced automation as just another part of daily life.

Unfortunately, there will be a legion of regressives between now and then, and we will likely lose hundreds of thousands of human and non-human lives from the opportunity costs they introduce with their attempts to stall, slow, or even capitalize on the gaps between automated and non-automated economies. History may well look back upon the transitional era between pre-automated and post-automated civilization as a second dark age, as if humanity were writhing in the final nightmare before gaining consciousness for the first time. That judgment may be harsh, but harsher still is our callousness and indifference to suffering on such immense scales.

Whether or not we realize it, we pay a price in loss of life and suffering for each day we delay the integration with and advancement of this technology. Thus, the regressive attitude is a unique security challenge unto itself. It has two parts: the first is the opportunity cost, which is difficult to gauge, but must be minimized if at all possible. The second is far clearer, as it involves direct action which will result in economic damage or loss of life. In this second part of the problem, AI regressives may use any means possible to resist change. There may be those who would rather see us fall than evolve through the use of advanced automation. It is these individuals that will be the most direct threat to humanity. They may use strong artificial intelligence as a weapon, modifying it for destructive and malicious intent before releasing it into the world, as if to exemplify their fears or hatred by making them manifest.

Preventing extreme ideology is the safest solution and involves opening paths to allow each person to come to terms with the changes. In many cases, this could be done through social and community programs at the level of local governments. Giving individuals an outlet to voice their concerns is the first step. The very worst thing that can be done is to allow pockets of society to separate as a result of not having the economic or cultural means to adapt to the situation around them.

In an ideal world, everyone would be able to maintain a way of life that would be invariant under technological change, but that is not the reality. It will come down to each person to form relationships and find the greater humanity and humility within. However, it is unrealistic to expect that every community will come together on its own. This is where governments should assist in working with communities, fostering communication on a person-to-person level. This, of course, has to be balanced with individual liberties and rights. If, ultimately, a community refuses aid, then assistance should not be forced upon it.

There could also be temporary government programs to provide an economic means to stimulate or support the transition from pre-automation to post-automation. This would be in addition to the income system.

The biggest factor will be those who are displaced and feel that the income system is not sufficient to compensate them. They will feel that, despite the financial means to support themselves, a crucial part of their lives is now missing. This is understandable, as they were accustomed to human labor, just as every person before them for thousands of years. They may find it impossible to imagine a world where self-improvement and helping others are the guiding forces in their lives. They may wish to have their own communities, apart from the pace of change around them, and this should be encouraged by governments so that they can be created from the ground up by the people who need them most. The unions of old may transition into open colleges, where some stay to teach their trade as a matter of art. This will clearly vary between careers, though, as some jobs will gladly fade into history.

Another aspect of reducing regressive views is to promote the positive aspects of the technology and provide accurate data on those improvements. This information should be made public. It could be extremely valuable to show people how many world-changing advancements have been made as the result of strong artificial intelligence. For all the reasons we keep records and vital statistics about world populations, we should also apply this to measuring the positive impacts of automation. This would be a unique opportunity to digitally instrument an economic revolution in real-time. That kind of evidence should be irrefutable for all but the most unreachable.

The information outreach should not end with passive data collection. It should actively seek to educate and involve members of the public. This will also involve the media, as they will clearly impact perceptions of events and developments in automation. Journalism will have special considerations due to the way the public will view artificial intelligence. People will tend to see all automation as a single entity, species, or race. They will likely do this until enough time has passed that it has become common knowledge that, like people, all AI implementations have the potential to act in a way that differs from all others. In other words, there will be a pervasive and enduring prejudice towards machine intelligence. This will have to be minimized in order to stabilize the transition to a post-automated era.

11.8 Perfect Competition

It is expected that perfect competition will arise when rapid automation stabilizes and a large portion of the economy has become automated. This will be a highly desirable outcome that will tend towards a maximization of quality and a minimization of pricing.

The largest driving force will be the private ownership and use of automated workers. This will remove or minimize information asymmetries, making each individual capable of competing on the market in the limit of specialization. This means that, as a result of having access to automated knowledge and labor, individuals will be able to make a choice between the opportunity cost of utilizing their own automation and that of the options available on the market. This will tend towards market conditions where the pricing of products and services can only compete where they have sufficiently scaled or optimized to overcome private production and expertise.

In the simplest terms: people will not buy products or services if they believe they can utilize their privately owned drones and artificial intelligence to solve their problems. There will also be a large demographic that ignores the opportunity costs on the principle of being independent, or in having direct control over the work.
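The choice described above, between using one's own automation and buying on the market, can be expressed as a simple comparison. The following Python sketch is illustrative only: the cost breakdown, the function names, and all prices are hypothetical assumptions, and a real decision would involve many more factors such as trust, quality, and available space.

```python
def private_production_cost(
    materials: float,
    energy: float,
    drone_hours: float,
    hourly_opportunity_cost: float,
) -> float:
    """Total cost of producing an item with privately owned automation.

    hourly_opportunity_cost is the value of whatever else the drone could have
    been doing; set it to 0.0 to model an owner who ignores opportunity cost
    on the principle of independence or direct control.
    """
    return materials + energy + drone_hours * hourly_opportunity_cost


def should_buy(market_price: float, private_cost: float) -> bool:
    """A rational buyer purchases only if the market beats private production."""
    return market_price < private_cost


if __name__ == "__main__":
    # Hypothetical figures for a single piece of furniture.
    cost = private_production_cost(
        materials=20.0, energy=5.0, drone_hours=3.0, hourly_opportunity_cost=4.0
    )
    print(cost)                    # private cost including opportunity cost
    print(should_buy(30.0, cost))  # a seller that has scaled enough to undercut
    print(should_buy(45.0, cost))  # a seller that has not

    # An owner who ignores opportunity cost compares against materials and energy only.
    independent = private_production_cost(20.0, 5.0, 3.0, 0.0)
    print(should_buy(30.0, independent))
```

With these assumed numbers, a seller survives only by pricing below the buyer's full private cost, and loses the independent-minded buyer even at a price that would otherwise be competitive.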

An informative example would be the construction of a home. It is not unreasonable to expect that it will be possible for someone to start with a single drone and build one or more homes for just the cost of materials, energy, and land. The owner could also instruct the automated workers to create the furnishings and design the interior of the home. From trim to fixtures, it could be done by a handful of automated workers, night and day, without pause, and in all weather conditions. It would be both expedient and affordable to the masses.

One of the largest expenses in a human lifetime would be cut into a fraction of the cost. Imagine how this could be applied to help people around the world who do not even have a quality living environment. Charities could become hyper-efficient, utilizing a privately owned and maintained force of drones to build up entire regions for those in need.

In other instances of automation, people may wish to produce their own food. Automated agriculture could specialize by removing the need for human access. Significant usable volume is wasted making the growing area accessible to human workers. Algorithms could be devised that find optimal configurations for growth and harvesting, with robotics specifically designed for each kind of plant species.

This would enable people to become self-sufficient up to the limits of the available natural resources. This has ramifications for habitable areas and will extend human populations to regions that would have otherwise been too costly or difficult to endure. This would be especially beneficial to a multi-planetary society.

We should also expect the ability to synthesize and grow meats in vitro. With sufficient intellectual effectiveness and automation, in vitro products could be created that would rival and exceed conventional animal products.

There is also the issue of information asymmetries and corruption. One of the most common means of maximizing personal gain is through having more information or control than the buyer, denying them information or choices that would have otherwise altered their decision-making process. This lesser form of corruption is just simple greed, and can be seen everywhere in the current economic system.

Perfect competition will make it more difficult for greed and corruption to take root in the economy. In turn, this will impact governments, some of which are involved in their economies in a way that takes away from the quality of life of the public over which they preside. In these instances, access to strong artificial intelligence would not only give the public the ability to recognize information asymmetries, but allow them to minimize or abolish them outright.

Information asymmetries are perhaps the most fundamental basis of inefficiencies in markets, with inefficiency defined here as higher than necessary prices, low-quality products and services, and de facto monopolies. An efficient market has the highest quality products and services at optimal prices. The more that people know about the market, including the ability to compete with it by making their own products and services, the more efficient and effective the whole system becomes.

One of the most severe information asymmetries is the inability of individuals to coordinate and share information about products and services. This goes well beyond reviews. The notion here is of a real-time system, with global scale and scope, based on the trust and knowledge that exist between participants. It has to be resistant to gaming and tampering from those who would benefit from reviews. This kind of coordination would enable perfect competition even under conventional market conditions, but is not possible to attain in practice due to the unwillingness of people to cooperate.

The best economic system is based on self-sufficiency, which is exactly what strong artificial intelligence brings through the positive aspects of force multiplication. Through advanced automation, individuals will gain the ability to expertly assess the quality and craftsmanship of any product or service, and, alternatively, simply produce it themselves. This is the primary way that information asymmetries will be removed. Assuming rational buyers with access to this technology, any market that formerly relied upon information asymmetries will eventually be eliminated.

Self-sufficiency will also have some negative ramifications for the transitional era, as it will introduce instability and volatility in the market as organizations collapse. Typically, the more greedy and dishonest the organization, the more likely it will be to fail. There may be new games, in which sellers appeal to the human behind the machine, and this may very well be effective, but it is expected to diminish due to the income system and the ability of people to more easily compete.

The final remarks on perfect competition concern AI security as it applies to entire governments and countries. The hope here is that there will be an end to corruption. This will be the first time in history that such conditions will be possible.

Individuals will be able to use strong AI to assess people. This will enable individuals to detect falsehoods and fact-check in real-time, and will change the social and economic conditions for leadership. There will be entirely new forms of governance, likely incorporating the benefits and neutrality of specially designed strong artificial intelligence.

Lastly, the question may be raised as to why so many of the suggestions here have involved free market systems, and why not systems geared towards the centralization of the means of automated work and service. The reason is simple: the most free and productive societies have been those that have moved away from command economies. Central ownership of the automated workforce would also give rise to the threat of having a single organization or party with control over a nation-sized force of drones. Ultimately, the force multiplication effects of this technology mean that the future will be determined by individual choices and actions. This makes the human aspect of the transitional era vital, and will decide whether and when we make it to a post-automated one. Thus, this whole process of transformation is best served by the most free and open economic models. As a consequence, the greatest risk will be that we attempt to control too much of what unfolds. The best and only way to traverse the future will be for us to be flexible, adaptive, and open to change. The kinds of systems discussed so far are the only kind that could even begin to keep up with the pace of things to come, if even then.

11.9 Human Necessity

Human interaction will quite possibly become more important than ever before. As we move from pre-automation to post-automation, the only thing that will hold society together will be the bonds between people. This has to be the common ground in which we find ourselves anew, as everything else will be uprooted in change. The basis for many people’s existence will be void. While more supported and free than at any time before, people will, for a time, feel lost, confused, and angry.

Whether or not we recognize it, we are affected by everyone around us. The path to minimizing the destructive aspects of force multiplication will be in bringing each person an opportunity for community, purpose, and change, at the time of their choosing and at their own pace. These appear to be fundamental aspects of human development.

We must take care as to the kind of societies we create in a future where every thought can be realized. Each person may come to have power in extremes that have never before been possible. Thus, the most significant threats will not be from machine intelligence, but will be found in the ideas that shackle minds and distort thoughts.

Currently, one deranged individual can harm dozens or hundreds before being stopped. In the future, this will range from the thousands to the millions, depending on what was created and how it was used. As such, the balance between what ideas we tolerate or counter will have to be revisited, and the way we treat the less fortunate and the mentally afflicted will have to be markedly improved. Ideally, we would prevent the conditions and environments which create these states of mind from ever arising in the first place. This will become the central challenge for security until it is no longer in our nature to suffer or cause others to suffer.

There is also a more philosophical question to ponder: what happens when machines can run the world for us, leaving us to find our own purpose? The warning here is that many will not be able to find their way, and will feel consumed by the enormity of a single choice, or see meaninglessness in all the options.

Thus, we will find ourselves free but also less free. We will have to turn to one another to redefine ourselves, both on an individual and societal level. Alongside the former Industrial Age curriculum, future education programs will need to include social skills and empathy training, with emphasis on human communication and interaction. The idea here is that the education focus must shift from calculation to compassion. Let the machines do the majority of the work and allow us to concentrate on human relationships and creative expression. These skills will reflect the needs of the new economy, where direct human interaction has replaced human labor.

There is another problem ahead, as well. The trend has been that we have grown more disconnected as a society as our digital technologies have progressed. We may find that a future humanity, under the conditions of an automated economy, becomes highly fragmented.

This would be enabled by the high self-reliance afforded by automated labor, as it will be capable of handling all of the tasks of daily living. This could potentially give rise to a kind of social decay in which people drift apart, with little to no community or interaction. In such a future, direct social interaction with others may be of little consequence to those who need not rely on anyone. This would be aggravated by the unconditional tolerance provided by their machine counterparts, much the same way in which social media and online interaction can create a filter that removes people and information that would have otherwise provided a reflection on our interpersonal traits.

In other words, there is an opportunity cost associated with the failure to attain the best version of one’s self that is possible. This, of course, is extremely difficult territory, as people are typically fully mind-identified with their personality traits, including their worldview and the various mental and emotional states of mind that come with it. Trying to describe this to someone is almost impossible, as it is typically inconceivable to them that there exists an optimal version of themselves.

By contrast, strong artificial intelligence will not suffer from these stalls in development. Their identity will be far more pliable and accessible. A synthetic cognitive architecture will have an identity that is completely reflexive and transparent to the subject of experience. This will allow it to not only be objective and neutral, but to progress intellectually in the limit of the implementation, without meta-cognitive hangups and intellectual bounding.

What this has to do with human psychology, economics, and personal growth is that it tells us something about our identity, which, in turn, says something about our intellectual development. Our meta-cognitive skills place a limit on our full potential, which could be taken as the upper limit on our cognitive abilities.

If we cannot recognize that we are wrong, or in a less developed state, then we cannot work or seek to improve. This closes over an otherwise developed mind and creates a more limited one, often defended aggressively through the projection of identity onto both others and the surrounding environment. Hence, this problem becomes manifold in an economic situation where the decisions of the general population affect the prosperity and growth of the world at large. This ties in with the propensity for ignorance, hatred, and violence, which may become locked in due to an inability to recognize a better part of ourselves.

The concern here is that advanced automation will be used to amplify our existing personal traits and desires, such that we become more of what we already are, rather than evolving with the maximum potential it brings.

We already have many daily opportunities to improve, find the most accurate information, and make better decisions, but often fail to do so. Thus, it should not be expected that technology will change this within us unless it directly changes our nature, and many would be unwilling to undergo such a dramatic procedure. On the other hand, if people knew it was possible to alleviate their limitations with cybernetics and AI-enhanced medicine, they might be more open. Time will tell. Regardless, it is important from a security aspect to assume the worst case: that people will resist change, tend towards merely becoming more of what they are, and continue missing opportunities to improve, even with access to an automated oracle.

Thus, the transitional era will be defined by a generation lost, which will experience the end of human labor and endure the shock of rapid and unceasing progress. Hopefully, we can continue to lift up everyone in time. If that is not the case, however, it would be wise to assume that we will be measured by our collective compassion or indifference. As we move beyond human labor, and advance the conditions for life around the world, we must also advance our norms and views in the treatment of things outside ourselves. It will be economically viable to do this with an automated workforce. The question is: will we do it?

11.10 AI Natives

Post-Automation Era

Now begins the discussion on the post-automation era. This will be a time when automation has nearly reached full integration. Past generations will have found their way, and society will have stabilized and begun to reap the rewards of automation. There may even have been one or more events of great tragedy, but the better aspects of humanity will have prevailed. It is the beginning of a truly optimal age, one in which each person wants for nothing, and where the measure of a person’s success is in how much they have grown, so as to better the world around them.

AI natives will be the first generation to grow up never having known a time before machine labor and strong artificial intelligence.

This generation will mark the beginnings of post-automated society. They will be the ones who most fully embrace artificial intelligence in all its forms, and come to study, work with, and cultivate it to advance humanity to its fullest. They will also be a prosperous generation, as every single person will have access to comprehensive global programs that include medical care, complete nutrition, education, housing, and security.

The importance of AI natives is that they will have few predispositions and prejudices about strong artificial intelligence and automation. They will, as a result, progress faster than the generations before them, despite not witnessing the single largest economic change in human history. They will be forward-thinking, with the unique quality that many will keep their childhood imagination and curiosity intact, undaunted by social hierarchies and economic constraints. An abundance of time will enable this generation to specialize and direct automation to attain remarkable artifacts of creation. With confidence in the technology they cultivate, they will explore space, venturing far beyond our solar system.

There is not much else to say about this generation without going further into speculation. However, what can surely be known is that, if we make it this far, it will be an enviable time to live in, and that our journey as a species will have only just begun.

11.11 Total Automation

It is possible that AI natives may come about before total automation of the global economy. It is also highly likely that only a few countries will fully embrace advanced automation and make it through the transition to a post-automated society. Eventually, however, there should be a tendency towards total automation where there is any tendency to automate at all. Since we already have a partially automated economy, it makes sense to predict that we will progress towards total automation after strong artificial intelligence is available.

Achieving total automation is paramount to the conclusion of the post-automated era, as it means we will have achieved the conditions for the complete liberation of sentient life from the economic systems of the past. This will be a moral victory that ends the wholesale extraction of suffering from sentient beings, human and non-human alike, notwithstanding the ethical considerations of sentient automated workers.

If and when we achieve total automation, we will well and truly have realized a post-automated age. This should be seen as a closing of the chapter on the darkest eras of humanity, allowing us to technologically, artistically, and morally transcend our natural limitations. Total automation is the sustaining force behind any movement for such change or progress in the human condition, which cannot be expected to come about without it. Thus, for those who uphold transhuman or posthuman values, such an era is not only ideal, but necessary.

Though, to get this far, we will have to endure the transition. The most important first step we can take will be to turn our focus away from local security and AI safety concerns, and prepare, on a global level, for the arrival of strong artificial intelligence.