Ch 8. Access to Strong AI

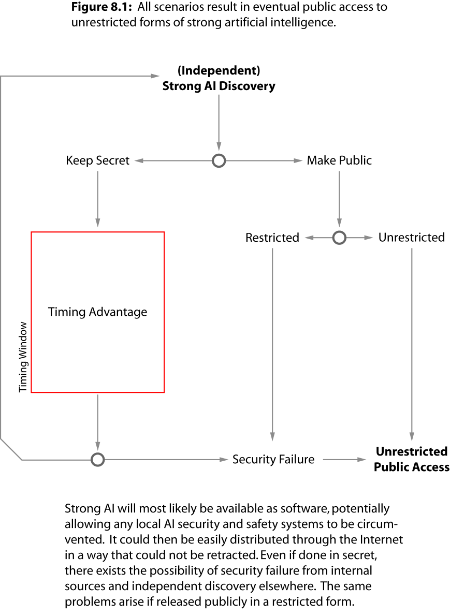

Unrestricted strong AI is likely to become widely available, regardless of strategy. This follows from the medium in which it is realized, which affords easy modification and distribution through the Internet. This chapter analyzes the scenarios in which strong AI is discovered, both publicly and privately. All of these scenarios end in public access to unrestricted AI. They differ only in the advantage that initial access confers.

8.1 Background

AI safety is a local strategy that focuses on making an AI implementation safe and reliable for use. It covers the implementation's description and realization, along with any immediate environmental constraints. By contrast, AI security is oriented towards a global strategy that focuses on the issues that will affect large populations. It includes the safety concerns of AI implementations, but also macro issues such as economic and social change. Most importantly, it differs from AI safety by addressing fundamental changes to security at national and international levels.

No matter how many self-security measures, safeguards, and failsafes are built into artificial intelligence, no matter how closely we align its values with our own, and no matter how “friendly” we make it towards humanity, AI safety will never scale to meet the global challenges.

This is because AI safety is focused on a model of self-security that ultimately relies upon the integrity of the AI implementation.

As covered in previous chapters, AI implementations are descriptions in hardware and software. In other words, they are just information. Those descriptions will eventually be reverse engineered, and any and all AI safety protections will be removed, disabled, or modified to suit the attacker's needs. Once such modified implementations are distributed through the Internet, we will enter a post-safety era for strong artificial intelligence. It is at this point that the public will gain permanent access to this technology.

While access to unrestricted forms of advanced automation will be an ongoing threat to AI security, it is the initial access that is the most dangerous, as it presents an extreme incentive for secrecy and misuse. Those who have initial access to unrestricted strong AI will be faced with the question of whether and when to release their discovery.

8.2 Timing

The worst class of initial access scenarios is a cascade of private strong AI discoveries by individuals and groups who maintain secrecy. Anyone who discovers strong AI of sufficient complexity, and who chooses not to share it publicly, will enter a timing window during which many of the strategies they might wish to employ gain an advantage.

They need not commercialize, announce, or share the strong AI to exploit that advantage. It could be used to create products, perform labor, strategize, and make decisions, among various other tasks. In this way, it could be seen as an on-demand savant workforce of researchers, engineers, and managers, limited only by the computational resources and information available.

Multiple independent timing windows could exist if several private discoveries have been made in secret. Where these windows intersect, they diminish each other's advantage in proportion to the effectiveness of the strong AI implementations being used.

This observation suggests that strong AI could be detected indirectly by examining the performance and behavior of individuals and organizations, especially when their effectiveness is disproportionate.

The timing window is temporary in this analysis, as no one can prevent an independent discovery. As such, the window will be most effective on its first day, with diminishing effectiveness each subsequent day until strong AI is either discovered elsewhere or is publicly released. Though highly unlikely, it is possible for multiple independent timing windows to arise. Any decision to exploit this timing advantage will have to weigh diminishing returns against an increased risk of detection.

In the end, every timing advantage will lapse, because research continues around the world and an independent discovery will eventually be made. Notably, the first to publicly release a working strong artificial intelligence will permanently reduce or eliminate this initial advantage.

8.3 Forcing

Powerful individuals or groups may attempt to force others into a specific strategy in order to reduce the number of counter-strategies they must employ or track. Examples include:

- Convincing people to share their work openly without intending to reciprocate equally.
- Tracking and monitoring talent within the artificial intelligence community.
- Misdirecting potential researchers into areas that are unlikely to lead to a strong artificial intelligence.
- Funding and recruiting individuals to promote ideas and concepts that increase the influence and reach of the individual or group employing the forcing strategies.

The response to forcing strategies is straightforward: no single individual or group should be trusted to be the arbiter of strong artificial intelligence. The incentives for self-interest are too great. Development must proceed with complete autonomy, free from influence, bias, or corruption. This necessitates a fully decentralized model of development and exchange.

Do not merely submit work and information to any one organization. Publish widely and distribute knowledge and work across multiple media. If privacy is a concern, release the information anonymously, and utilize adversarial stylometric techniques to prevent detection of authorship from the style and composition of texts.

Research must be open to new directions. Given that no one currently has a publicly working strong AI, all viable avenues of research should be considered. The answer may come from unexpected directions that are unpopular or known to only a few.

8.4 Restricting

Consider the scenario where a benevolent and well-meaning individual or group releases a restricted, locally safe and secure version of strong AI to the public in non-source form.

If it is offered as a downloadable program or application, or embedded within a product, it can be extracted and reverse engineered. Its protections could be overcome in the same way that copy protection and digital rights management have been overcome in software and hardware.

To prevent this, the strong AI might instead be released as a service. This too could be exploited through a vulnerability or attack. The servers may be hacked, or information may be leaked from within the organization. There is also the potential for physical security failure, social engineering, espionage, and surveillance.

Finally, even if the local security of the implementation or service can be upheld, it does not prevent an independent discovery, which will likely be accelerated by the presence of a working implementation. Given such clear evidence that the technology is possible, others will redirect their research.

8.5 Sharing

Even if AI research were conducted openly and transparently, there would still be the threat posed by individuals and groups with large resources. Being open does not mean that everyone will share their results. Many will monitor the work of public efforts to accelerate their own private research. This is especially risky if a published incremental result is underestimated in both impact and scope, since it could then be built upon by those who do recognize its merit. Put another way, it is dangerous and costly to underestimate any contribution to artificial intelligence research. It may take only a single conceptual breakthrough to bring a strong AI discovery within reach.

In the case of a bad actor, the cost of mistakenly treating a breakthrough as just an incremental result is equal to the lost utility of being able to exploit a timing advantage. In the case of a good actor, it is equal to the utility of eliminating all further timing advantages from this technology everywhere. The good actor is pressured inversely to the bad actor: the more timing windows that have been exploited, the lower the payoff of initial access. It is therefore advantageous to a global AI security strategy to publicly release and widely distribute a strong AI discovery, as doing so dramatically reduces the advantage of having initial access.

Above all, the sharing approach accelerates the development of artificial intelligence, and not necessarily in a direction that leads towards advanced, sentient forms with generalizing capacity. This is because there is no way to force people to share their work openly. It will also be difficult for the community to determine which direction to take, which will likely result in cycles of trendsetting and following.

As a standalone strategy, sharing fails for the same reason that asking everyone to wait on research fails.

Even with a free software movement for strong artificial intelligence, there are still major incentives for individuals and groups to operate in secrecy. They may hope to exploit a timing advantage if they believe they can gain initial access to strong AI by capitalizing on underestimated contributions. The incentives for this technology are too high to expect otherwise.

No single individual or group, regardless of composition or structure, should be entrusted with the management and organization of this technology. A global AI security strategy must be formed and followed in a fully decentralized way, with an international coalition that is prepared to respond, integrate, and adapt when a strong AI discovery is finally made.