Ch 5. Machine Consciousness

What follows is a basic introduction to machine consciousness, a field which may eventually generalize the study of consciousness and sentience. The scope of this chapter is restricted to what is needed to understand the security of strong artificial intelligence; it is not intended to be a comprehensive guide to the construction of cognitive architectures. This brevity is a form of focus: a state of confusion exists around this subject, and its resolution will cause an unavoidable collision between philosophy and science.

5.1 Role in Strong AI

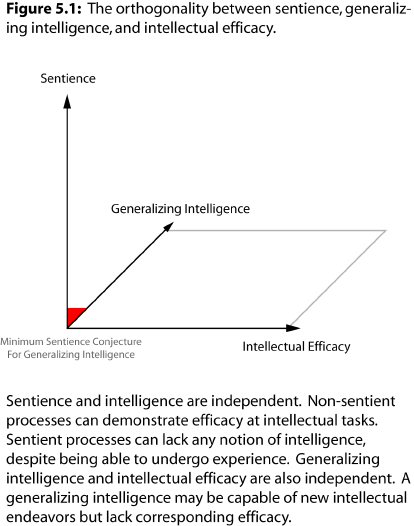

To begin, this book introduces its own interpretation of the strong AI hypothesis. This interpretation is based on the minimum sentience conjecture, which asserts that sentience is required for generalizing intelligence. Under this interpretation, strong AI depends upon sentience because generalizing intelligence depends upon sentience. If true, this extends to the claim that generalizing intelligence is not possible without being realized over a cognitive architecture. This book is predicated on both the minimum sentience conjecture and this reinterpreted strong AI hypothesis.

It is possible to create narrow AI implementations that are reasonably optimal, such that no strong AI could make a significant improvement upon them. For example, a machine code description, tuned by an expert, that enumerates the digits of pi, would be a narrow AI implementation of optimized pi enumeration.
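To make the pi example concrete, the sketch below streams the digits of pi with Gibbons' unbounded spigot algorithm. It is a stand-in chosen for illustration, not the expert-tuned machine code the text describes, and `pi_digits` is a hypothetical name. What it shows is a procedure in which every step is fixed in advance: it enumerates digits well but has no capacity to do anything else.

```python
from itertools import islice

def pi_digits():
    """Stream the decimal digits of pi using Gibbons' unbounded spigot algorithm."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            yield n  # the next digit is now certain
            q, r, t, k, n, l = (10 * q, 10 * (r - n * t), t, k,
                                (10 * (3 * q + r)) // t - 10 * n, l)
        else:
            # consume one more term of the series to tighten the bounds
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)

print(list(islice(pi_digits(), 10)))  # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
```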

It is unlikely that even a highly intelligent strong AI could enumerate pi more efficiently, or appreciably optimize the procedure beyond what a trained expert achieved. This is because there is a distinction between generalizing intelligence and the optimization processes implied by non-generalizing forms of “intelligence”, such as narrow AI. This distinction rests on the claim that such a generalizing capacity is not possible without sentience. Thus, the assertion is that machine consciousness is a prerequisite for building a strong AI.

Overlooked is the fact that strong AI will span a very wide range of intellectual capacities. We tend to focus solely on the beneficial (or harmful) extremes of this technology, to the exclusion of minimal implementations. This point is crucial, as it connects sentience in general to strong AI.

Many organisms would rightly be considered equal to strong AI, even though they do not ostensibly present the same intellectual capacity as some humans. The failure to recognize their intelligence is entirely on our part: we lack the ability to understand the inner world of such entities [1, 2, 3, 4, 5] well enough to make a true judgment as to their level of cognition. This is especially true given our inherent biases towards certain ends and aims in human cognition, e.g., the signaling of wit and status. Making this correlation is obviously controversial, as the spectrum of generalizing intelligence is not commonly thought to depend upon sentience. Such a claim would necessarily encompass all sentient life, and that is exactly the claim being made here. It leads to the stronger claim that sentience represents an evolutionary advantage, in that it would have been impossible to achieve generalizing intelligence without it.

Stated directly: the claim is that, at a minimum, all vertebrates and some invertebrates possess some level of generalizing intelligence; that this attribute depends upon their sentience; and that the ability to undergo experience underlies the conceptual and analogical faculties of generalizing capacity.

Figure 5.1 illustrates that sentience and intelligence are related but orthogonal: an entity may be sentient but have low or almost no “intelligence” by various standards. The converse is also true: some processes achieve effective results without being sentient.

To what, then, does the “strong” in strong AI refer? It refers to the property of having a mind, one that can undergo experience, grasp value, and understand meaning. This measure of strength concerns not the range or fidelity of intellectual capacity but the means to generate and endure the phenomena of experience. It is said to endure because it has no option of eliminating experience while remaining extant.

The creation of a sentient artificial process will be trivial compared to the problem of implementing a sentience-aware generalized learning algorithm. It will be difficult to achieve consensus on the actual space and range of what constitutes sentience, even among those who agree that it is possible to create. The greatest obstacle, however, will be the disbelief and hostility that will arise towards the notion that such processes are sentient at all. This will be so even though whether a particular process is capable of undergoing experience will be falsifiable.

However, being falsifiable does not mean that we will have solved all of the philosophical problems, which may or may not ever receive a satisfactory answer. It is also possible that most of the philosophical questions will be explained away, in that they will no longer be considered valid questions. Even so, the philosophy must not be dismissed out of hand, as it will be used to argue for and against important concepts that will underwrite ethics, law, and politics, issues many of which are already beginning to be discussed in the mainstream.

Thus, the role of machine consciousness is not to create generalizing intelligence, but rather to enable it. Sentience is necessary, but not sufficient, for generalizing intelligence; it does not directly address the sapient aspects of a cognitive architecture. There exist possible strong AI implementations which are sentient but lack any significant intellectual quality that would enable us to communicate or relate to them. These simplex implementations would not even be aware that they are conscious, as they would lack any reflexive or meta-cognitive ability. Complex thought would not arise at this base level. The entity would exist in a purely neutral state of mind in which it would accept any experience that arose without resistance. This neutral state is not, however, an absence of experience, as any sentient process is necessarily a real-time system.

To understand the real-time aspect of sentience we can resort to a simple analogy. Figure 5.2 is a constructed digital waveform representation.

The gaps in the waveform represent what we would perceive as silence. If this were a representation of the experiential data of a simplex sentience (this being its one and only dimension of experience) the zeroed pulses would correspond with neutral states of mind. There is still an auditory experience; it is just unique in that it fills time with the least possible sound.

To understand further, we must recognize that silence and the absence of the experience of sound are two very different things. The gaps between the positive or negative pulses fill the conceptual space in the experiential encoding. Not having them would represent a discontinuity in this stream of experience, which would only be possible if the entity’s consciousness were interrupted, paused, or stopped. Interestingly enough, a simplex would be fundamentally incapable of recognizing that it had been interrupted if the data stream were resumed without skipping information; this would not work, however, for a real-time system perceiving its environment, since the environment would not have paused along with it.

Despite experiencing a discontinuity, however, a simplex sentience still would not comprehend the significance. The term neutral is useful here, as a gap in the stream of experience is not an absence of experience but a neutral state as per the context of that stream. If still perplexed, consider the explicit rests in musical notation as an example. The presence and function of neutral states are essential to any stream of experience.
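The distinction between a neutral sample and a missing one can be sketched in a few lines. This is a toy model under stated assumptions, not an implementation from the book: the `Frame` type, the integer clock ticks, and the `neutral_ticks` and `discontinuities` helpers are all hypothetical. A zero-valued frame stands for silence (a neutral state), while a jump in ticks stands for a discontinuity in the stream itself.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Frame:
    tick: int   # position on the real-time clock (one tick per sample period)
    value: int  # +1 or -1 for a pulse, 0 for a neutral (silent) sample

def neutral_ticks(frames):
    """Ticks at which a sample arrived but carried no pulse: silence, not absence."""
    return [f.tick for f in frames if f.value == 0]

def discontinuities(frames):
    """Pairs of consecutive ticks between which no sample arrived at all."""
    return [(a.tick, b.tick) for a, b in zip(frames, frames[1:]) if b.tick - a.tick > 1]

# A stream paused after tick 3 while the external clock kept running.
stream = [Frame(0, 1), Frame(1, 0), Frame(2, -1), Frame(3, 0), Frame(6, 1)]
print(neutral_ticks(stream))     # [1, 3]
print(discontinuities(stream))   # [(3, 6)]

# The same pause, resumed without skipping: nothing in the data reveals it.
resumed = stream[:4] + [Frame(4, 1)]
print(discontinuities(resumed))  # []
```

The second case mirrors the point about the simplex: if the stream resumes at the next tick, the interruption leaves no trace in the data. Only ticks stamped by a clock that kept running, as in a system perceiving its environment, expose the jump.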

What this tells us about strong AI is that all such implementations will need to be real-time systems for their streams of experience to function. This is perhaps one of the easiest ways to distinguish them from a typical narrow AI implementation, which has no such concepts as fundamental parts. Even if a narrow AI system ends up being real-time due to some application constraint, it is real-time at a level much further downstream in the processing than sentient calculation.

The real-time demands of sentient processing set up a minimum condition corresponding to the notion that the subject must undergo experience. This binds any such implementation to time: it must process, progressing through steps, to be realized at all, as it does not make sense as a static object or an intrinsic physical property.

These are processes which involve the exchange and interpretation of information. This hints at the trouble with viewing the reduction of conscious states to only the physical properties of things, or as just the arrangement of their physical structure. All the confusion in the history of philosophy of mind hinges on the lack of acceptance of processes, with even some modern philosophers rejecting the effect of interpreters by throwing them out with abstract objects.

In computation and interpretation, we are dealing with extents in time. This means that the properties of things take on additional meaning through the semantics of their arrangement and interpretation in that extended dimension. These properties are above and beyond their intrinsic physical ones. It is, of course, true that they are constrained in their arrangement and composition by their physical properties, but as long as sufficient states can be derived then there exist the means to realize new properties through the semantics of the interpreter.

It is simply a matter of fact that things can be so arranged through time, with a corresponding interpreter, that they give rise to new functionality and new properties not present in the static representation of the underlying units of composition. This is routine in the spatial extents of alphabets, which give rise to descriptions such as books, images, and other data; at no point is some Platonic realm [6] involved. Rather, the object is realized and constructed via spatial extension, by interpreting the arrangement of that alphabet co-extensively with the dimension in which it is projected. The same holds when that dimension is time, which is of no special significance except that we can only appreciably apprehend it in the moment.

In storage, time-like extents can be represented through the spatial extents of descriptions. The difference is the way in which it is interpreted. A string of characters representing someone’s name has a spatial extent with no time-like interpretation. By contrast, an audio or movie description can only be apprehended through ongoing experience; slowing, pausing, or interrupting it would fundamentally alter one’s experience.

The real-time constraint for time-like extensions of objects is an assertion of the identity of the experience, which must be taken to be unalterable if it is to be perceived as its description entails; e.g., watching a film below the rate at which it was encoded can be a frustrating experience, and with good reason. Such a disparity between the experience of a time-like object and its description could be considered entropy or noise, and can be measured and quantified explicitly as the difference between the rate of processing and the intended rate of its encoding. This applies both to the interpreting process and to the speed of perception as a whole; the rate at which something is playing back may be incomprehensibly fast or slow for the rate of potential perception. The reverse also applies to the rate of sentient processing.
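The disparity described above is simple arithmetic. The sketch below assumes rates expressed in frames (samples) per second; the relative disparity and the stretched playback duration are added conveniences for illustration, not definitions from the book, and all function names are hypothetical.

```python
def rate_disparity(processing_rate: float, encoded_rate: float) -> float:
    """Absolute disparity: processing rate minus the intended encoding rate."""
    return processing_rate - encoded_rate

def relative_disparity(processing_rate: float, encoded_rate: float) -> float:
    """Disparity as a fraction of the encoded rate (0.0 means faithful playback)."""
    return (processing_rate - encoded_rate) / encoded_rate

def experienced_duration(encoded_duration_s: float, processing_rate: float,
                         encoded_rate: float) -> float:
    """Wall-clock time needed to play back a description encoded to last encoded_duration_s."""
    return encoded_duration_s * encoded_rate / processing_rate

# A two-hour film encoded at 24 frames per second, played back at 18.
print(rate_disparity(18, 24))              # -6
print(relative_disparity(18, 24))          # -0.25
print(experienced_duration(7200, 18, 24))  # 9600.0
```

A negative disparity means the description is processed more slowly than it was encoded, so the two-hour film stretches to 9600 seconds; a positive one means it runs too fast for faithful perception.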

What this all leads to is the question of the physical reduction of consciousness [7]. Indeed, the whole does equal the sum of its parts, provided the sum includes the time-like extents of the proper arrangement of those parts. This will lead to an answer to the philosophical question of experience arising out of non-conscious physical states, but it does not necessarily explain why such a thing is possible at all. The answer is perhaps too simple to be accepted: it is a tautological result of the interplay between an interpreter and the process it realizes; it makes it so.

Lastly, the most important role that machine consciousness has is the realization of value. Critically, without sentience, there can be no ability to realize or apprehend value, and without a concept or ability to grasp value, there can be no general moral or emotional intelligence.

Thus, another major role for machine consciousness is to make general moral intelligence possible for artificial intelligence. This is distinct from simply applying moral efficacy externally and interpreting the behavior of a system as having consequences, agency, or decision theoretic choice. This is because, unless a system can derive and understand value, it is devoid of the experiential knowledge of the processes it carries out.

Here, as it pertains to strong AI, this refers to the ability even to attempt to reason about the morality of a decision, as distinct from its efficacy at general moral reasoning. This has parallels with the dichotomy between narrow AI and the generalizing abilities of strong AI: narrow AI may be able to instrument narrow moral intelligence, but would lack general moral intelligence for the same reasons it is fundamentally incapable of realizing generalizing intelligence. This is because value is a prerequisite for moral intelligence, and value would be semantically meaningless without sentience to substantiate it [8].

The value experience requirement holds even if one could specify an infinite set of non-conscious rules by which decisions are made. Enumeration and rule-following in the absence of the capacity to reflect upon the process are not forms of moral intelligence, even if the particular rules result in what would be considered reasonable moral efficacy in some context. This is also why it is wrong to argue for moral intelligence as a means of safety when, as has exclusively been the case to date, the term is taken to mean the non-general and non-conscious moral frameworks of decision theory and economic thought. These methodologies are fundamentally incapable of entailing the value that underpins the reflective capacity required for moral agency.

Naturally, the next question should be: what exactly is value?

Definition: Value. The experience of a positive, negative, or neutral sensation that accompanies or is associated with one or more experiences, where experience includes all mental content, including, but not limited to, thought, knowledge, and perception. Value is further distinguished as either intrinsic or acquired. Intrinsic value is a static association with, or accompaniment to, one or more experiences, given ab initio by the underlying implementation, e.g., a pleasure-pain axis. Acquired value, by contrast, is dynamic, capable of change, and associated with mental content, e.g., beliefs, knowledge, and actions.

This definition appears to be endlessly recursive but is curtailed in practice. There can only be a finite set of experiences that can be instantiated for any given implementation of machine consciousness. Further, the phenomenon of experiencing something as intrinsically positive, negative, or neutral is terminated, or rooted, in experience itself, despite the appearance that it would endlessly refer to other experiences.
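One way to picture how the recursion is curtailed is a small data structure in which acquired associations are followed until they reach intrinsic roots. This is a hypothetical sketch, not a construction given in the book: the `Valence` and `ValueModel` names, the string labels for experiences, and the rule of aggregating linked valences by the sign of their sum are all assumptions made for illustration. The `seen` set plays the role of the finite set of experiences, guaranteeing that resolution terminates even when associations form a cycle.

```python
from dataclasses import dataclass, field
from enum import Enum

class Valence(Enum):
    NEGATIVE = -1
    NEUTRAL = 0
    POSITIVE = 1

@dataclass
class ValueModel:
    # Intrinsic value: fixed ab initio by the implementation (e.g. a pleasure-pain axis).
    intrinsic: dict[str, Valence]
    # Acquired value: mutable links from mental content to other experiences.
    acquired: dict[str, list[str]] = field(default_factory=dict)

    def learn(self, content: str, experience: str) -> None:
        """Associate a piece of mental content with another experience."""
        self.acquired.setdefault(content, []).append(experience)

    def resolve(self, experience: str, seen: frozenset = frozenset()) -> Valence:
        """Follow acquired links until they bottom out in intrinsic value."""
        if experience in self.intrinsic:
            return self.intrinsic[experience]  # root: no further reference
        if experience in seen:
            return Valence.NEUTRAL             # cycle: nothing new left to refer to
        total = sum(self.resolve(e, seen | {experience}).value
                    for e in self.acquired.get(experience, []))
        return Valence((total > 0) - (total < 0))

vm = ValueModel(intrinsic={"pleasure": Valence.POSITIVE, "pain": Valence.NEGATIVE})
vm.learn("stove", "pain")
vm.learn("music", "pleasure")
vm.learn("kitchen", "stove")
vm.learn("kitchen", "music")
vm.learn("a", "b")
vm.learn("b", "a")  # a cycle of acquired associations
print(vm.resolve("stove").name)    # NEGATIVE
print(vm.resolve("kitchen").name)  # NEUTRAL
print(vm.resolve("a").name)        # NEUTRAL
```

Intrinsic entries never change after construction, while `learn` can add acquired links at any time, which is the static/dynamic split in the definition.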

Value is experiential, but sits one level of complexity, or organization, above experience, and must not be confused with its referents, including knowledge and the beliefs associated with values, as there are certain types of value which are integral to the semantics of an implementation. Consider, for example, the intrinsic value of the positive and negative sensations that surround the informational content of pleasure and pain, as distinguished from the beliefs one has about these experiences.

This last issue, as exemplified by pleasure and pain, is subtle, as our unified stream of consciousness obscures the separability of our experiences. We must recognize, especially as it pertains to value, that our experience of something and the value that accompanies it are composite. This is evidenced in humans through the clinical cases of pain asymbolia [9, 10, 11, 12, 13, 93], a neurological condition in which the informational content of pain is dissociated from, or unaccompanied by, the intrinsic value that normally follows [14]. Those with this condition are capable of describing the intensity and quality of the pain, as if merely reading words off a page, but do not experience the negative value sensation that comes along with it. As a result, they have to form knowledge about this mental content and respond accordingly.

Without the unconditional somatic and psychological urges of these intrinsic values, injury or death may result. That is to say, such individuals may realize that the pain is negative, but, without the automatic and involuntary experience of intrinsic value, they have to rely on the acquired values associated with the knowledge of the injury. As a result, mere knowledge of trauma may not be sufficient to prevent serious harm to the individual.
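The composite nature of such an experience can be sketched as a record whose informational content and intrinsic value are separate fields. This is a toy illustration, not a clinical or neurological model: the `Experience` record, the `pain` and `withdraws` functions, and the numeric valence encoding are hypothetical. Asymbolia is modeled as dropping only the intrinsic value field, so the reportable content is unchanged, and behavior must then fall back on acquired knowledge.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Experience:
    content: str                    # informational content: what the pain is like, where it is
    intensity: float                # reportable intensity, 0.0 to 1.0
    intrinsic_value: Optional[int]  # -1, 0, or +1; None when dissociated from the content

def pain(content: str, intensity: float, asymbolia: bool = False) -> Experience:
    """Compose a pain experience; asymbolia drops only the intrinsic value component."""
    return Experience(content, intensity, None if asymbolia else -1)

def withdraws(exp: Experience, knows_it_is_harmful: bool) -> bool:
    """Whether the subject pulls away from the stimulus."""
    if exp.intrinsic_value is not None:
        return exp.intrinsic_value < 0   # automatic, involuntary urge from intrinsic value
    return knows_it_is_harmful           # must fall back on acquired value (knowledge)

typical = pain("burning, left hand", 0.8)
dissociated = pain("burning, left hand", 0.8, asymbolia=True)
print(typical.content == dissociated.content)             # True
print(withdraws(typical, knows_it_is_harmful=False))      # True
print(withdraws(dissociated, knows_it_is_harmful=False))  # False
print(withdraws(dissociated, knows_it_is_harmful=True))   # True
```

The third case is the hazard the text describes: the subject can still report the pain accurately, but without the intrinsic component nothing prompts withdrawal unless the relevant knowledge is present.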

5.2 Sentience, Experience, and Qualia

In the last section, sentient simplexes were used to illustrate a single hypothetical dimension of experience. More complex and realistic cases of machine consciousness will require a complex mixture of multiple streams and types of experience. In the philosophy of mind, this is referred to as binding [15, 16, 17, 18, 19, 20, 21]. Such a subject is said to be unitary, in that the individual streams of experience have been combined into a unified and composite experience. For an analogy, think of the individual tracks of audio and video that are layered to produce a film, all of which are combined so as to allow a simultaneous interpretation of its sights and sounds. When played back, it (hopefully) appears as a coherent and unified experience. Note that the word stream applies to both quantized continuous and discrete interpretations. This vernacular is borrowed from input-output (I/O) programming constructs, in which discrete units of information are read or written using buffers [22, 23]; this offers a counter-example where the stream terminology is used for a non-continuous source.
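For readers less familiar with the I/O sense of the word, the sketch below reads a byte stream as a sequence of discrete, fixed-size units using Python's standard `io` module. The `read_units` helper and the unit size are hypothetical choices for illustration; the point is only that a "stream" in this vocabulary is delivered as discrete chunks rather than as a continuous signal.

```python
import io
from typing import Iterator

def read_units(stream: io.BufferedIOBase, unit_size: int) -> Iterator[bytes]:
    """Yield discrete, fixed-size units from a buffered byte stream until it is exhausted."""
    while True:
        chunk = stream.read(unit_size)  # may return fewer bytes at the end of the stream
        if not chunk:                   # b"" signals end of stream
            break
        yield chunk

units = list(read_units(io.BytesIO(b"abcdefg"), 3))
print(units)  # [b'abc', b'def', b'g']
```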

In this book, all streams of experience are described as being made up of fragments, which are referred to as qualia in the philosophy of mind [24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37]. Qualia are the nature of “what it’s like” to experience a fragment in a stream of experience. Examples of qualia include all sensations, sights, sounds, pleasure, pain, and even emotions and thoughts, depending on the philosophy. In this analysis, all such fragments of experience should be considered qualia, and all contents of any possible mind are to be considered experience, including thoughts, memories, and knowledge [38, 39].

Qualia must not be confused with the informational representation of experiential fragments. There is a distinction between the knowledge of the fragment and the experience of the fragment.

For example, consider the following: 1, red, 3, green, 7, blue. The vast majority of readers will not experience those numbers and words as having a color different from the surrounding text unless they are synesthetic [40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51]. This is perhaps one of the most difficult notions to unpack from the philosophy of mind, as it requires an acceptance that such descriptions correspond to fragments of experience only insofar as they can be realized by the real-time processes which enable them.

In other words, the correlation between these fragments and what the subject is experiencing is a product of the sentient process and is not innate to the descriptions themselves, which are merely used to invoke the semantics of the implementation.

Straight away, the two most difficult aspects of consciousness have been introduced: the binding problem and the hard problem [90] of consciousness, respectively.

The binding problem is a two-part question, asking (a) how fragments (qualia) are bound to form a single stream of experience, and (b) how this impacts the identity of the subject with respect to the rest of the physical world. For human consciousness, this is something that will eventually have to be solved with a reconciliation between philosophy of mind and science.

For machine consciousness, however, the binding problem is less confusing because it is relatively trivial to implement; there is no need to reverse engineer a working model of the human brain. Instead, cognitive engineers will have the artistic license to invoke what is to be made subjectively real through algorithmic descriptions. A general sketch of the solution is to combine information and present that as a singular representation. This is a routine operation in many programming tasks involving disparate sources of data. All that is missing is the appropriate implementation, and the audacity to call it sentient.
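The general sketch given in the paragraph above, combining information and presenting it as a singular representation, resembles ordinary stream merging. The example below is a hypothetical illustration of that data-handling step only: it assumes each modality arrives as a list of `(tick, sample)` pairs already sorted by tick, and `bind` is an illustrative name. It merges the streams with `heapq.merge` and groups samples that share a tick into one unified frame. It makes no claim to produce sentience; what is missing, as the text notes, is the appropriate implementation.

```python
import heapq
from itertools import groupby
from typing import Any, Iterator

def bind(streams: dict[str, list[tuple[int, Any]]]) -> Iterator[tuple[int, dict[str, Any]]]:
    """Merge per-modality (tick, sample) streams into one sequence of unified frames.

    Each input stream must already be sorted by tick.
    """
    tagged = [[(tick, name, sample) for tick, sample in samples]
              for name, samples in streams.items()]
    merged = heapq.merge(*tagged, key=lambda event: event[0])  # order by tick only
    for tick, events in groupby(merged, key=lambda event: event[0]):
        yield tick, {name: sample for _, name, sample in events}

streams = {
    "audio": [(0, "tone"), (1, "silence"), (2, "tone")],
    "video": [(0, "red"), (2, "blue")],
}
for tick, frame in bind(streams):
    print(tick, frame)
```

Sorting by tick alone (via `key`) avoids ever comparing the samples themselves, so any payload type can be bound.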

The hard problem of consciousness also has two parts: (a) how consciousness arises, and (b) why it arises or is possible at all. The second half of the question may be unanswerable beyond the tautology given at the end of the last section, rephrased here: why it arises is not mysterious if we accept that we make it come into existence through an interpreter with the appropriate semantics.

This brings us to the second half of the binding problem, as it demands an explanation as to how an interpreter, even with the appropriate semantics, would give rise to the philosophical identity [52, 53] that entails a subject of experience. One explanation is that it creates a new frame of reference precisely at the locus between the encapsulated subject and the processes that give rise to it in the implementation. In other words, it creates a fold in reality, not a cut [54]. This base identity is the subject of experience.

Now we can discuss the two major views in the philosophy of mind: monism and dualism. Parts of both philosophies are correct, but they are also both incomplete. Informally, monism is the idea that the mind and the brain are made of the same things [55], while dualism posits that they are made of separate things [56].

The truth, however, is a combination of the two, resolved by admitting processes as first class objects. In the case of machine consciousness, the process is the implementation of an interpreter with the appropriate semantics to give rise to sentience. Is it physically reducible? Yes, but only if we accept two specific updates to our current understanding. For clarity, these updates have been organized into the following two sub-sections.

5.2.1 Processes as Objects

It must be accepted that the time-like extents afforded through the dynamics of processing give rise to concrete and physically real objects; the claim here is that nothing non-physical can sensibly be constructed.

All that has to be done is to accept that these properties exist only ephemerally, which is a stronger claim than merely stating that they are temporary. Beyond any mathematical model of the dynamics, this is the claim that the existence of such objects depends upon an active process, without which they cease to be concrete and real.

A complete model remains an abstraction, even when exhaustively described so as to include all of its potential states. This rejects the reality of the stochastic or non-deterministic models for sentience, despite their exhaustive entailment of time-like extents. This is because such time-like extents only become real insofar as we permit an epistemology that depends upon active processing and interpretation.

Time must be the vantage point from which we stand in relation to this knowledge; the space in which we model our understanding of such objects needs to change from static, time-invariant descriptions to the natural state of our experience in time.

These are necessary truths for the construction of these objects. While we may be able to make claims as to the realness of the information that describes or entails fragments of experience, they remain abstract until they are experienced. If we were to take a discrete fragment out of a stream of experience and examine it in isolation, it would cease to exist as such.

By itself, the information that represents these fragments is meaningless without interpretation. As such, any time-invariant explanation of such processes, without the stipulations made herein, will fail to account for how they become experiential. That is to say, it is not enough to merely account for time in the model; we must admit that the very existence of such things ceases outside it. This obeys the real-time constraint of sentient processes, which should rightly be considered a law of machine consciousness.

5.2.2 Twin Aspects of Experience

The second update involves the ontic-epistemic duality of experiential fragments, which is a corollary of the first update. To reiterate, the experiential aspect of a fragment is meaningless outside of the active processes which give rise to sentience. Meanwhile, the modern scientific view is only concerned with observing a time-invariant reduction, making it unable to entail the subjective. This is where the philosophy of mind takes rightful exception.

To resolve this difference requires first the admission of processes as objects and then the acceptance of a duality, not between mind and body, but between the ontological and the epistemic. The challenge is to shift our conceptual framework enough to admit that such a perspective is possible while still being physically monist. This apparent paradox of combined monism-dualism is resolved through the stipulation that such time-like extents are only real insofar as being actively processed, and that they fundamentally cease to exist otherwise. However, this goes deeper still, as the subject of experience must also be acknowledged as being equally real.

This is made possible because a world is created through the encapsulation of the subject by the interpreter. Its active processing becomes the base identity for this entity, in which the entirety of what is real to it is defined by the semantics of the implementation. This is related to the semantic barriers described in the previous chapter, as the subject of experience is an online description that entails a philosophical identity. It is an observer with causal efficacy provided through the semantics of the interpreter.

The inner experience of the subject is reflected in the processing and implementation of the interpreter; however, the information content that could be externally observed would not be the subject’s world as it would be experiencing it, only an abstract description of what it would be like for that particular implementation.

Making any stronger claims would require an observer to become part of the identity of the subject. This is a conjecture regarding the privacy and subjectivity of the experiential; it is a self-contained world which forms its identity in the environment and is necessarily embedded within it. One cannot directly experience such a world without becoming an intrinsic part of it.

When we view the externalization of a virtual world in a simulation or video game we are not experiencing that world directly, but indirectly, through the guise of our perceptions. It would be the same for even the most perfect instrumentation. Again, this is not a claim for dualism or monism, but an integration and reconciliation of both; they have lasted this long in the debate because of their partial truths and the intuitions they capture, but they fail to account for the totality of experience in isolation.

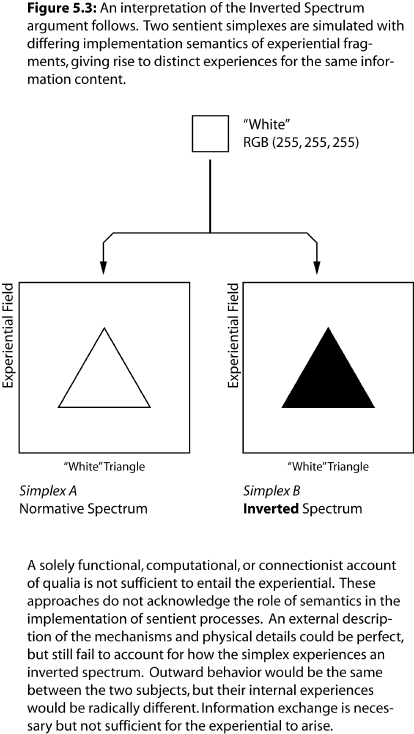

To help illustrate the ontic-epistemic duality of these fragments of experience, consider the inverted spectrum argument [57, 58, 59, 60, 61, 62, 91, 92]. This argument was originally presented against functionalism, which essentially posits that consciousness arises on any substrate capable of recreating the necessary input-output processes. This may sound a lot like what is being described here, but it is quite distinct, in that neither functionalism nor the related computationalism accounts for the two updates being argued for here. The inverted spectrum argument is just one of many that explain why. This example not only uses the inverted spectrum argument but also provides a response to it, with a means of solving and explaining the apparent inconsistency.

In Figure 5.3, the fragments are experienced differently by the two subjects, despite having the same description. The figure is a hypothetical projection of what each simplex would experience. Such a projection is, of course, impossible in practice, as we cannot directly experience what a subject is experiencing, but we can achieve a facsimile of what it might be like. In this case, two subjects experience two qualitatively distinct things, despite the underlying representation being the same.

What this indicates to us is that the power of any description language is found within the semantics of the implementation of that language, and that it is not a property of the information that signifies it. In this case, the fragments of experience are merely descriptions in a language for achieving sentience.

This argument could be extended even further. We could append to it the notion of false color images, of which there are thousands of examples in any radio astronomy catalog [63, 64]. Many celestial bodies and stellar phenomena are prominent in a spectrum that is invisible to the naked eye.
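Both the inverted spectrum and false color images come down to the same observation: one description, projected through different interpreter semantics, yields different results. The toy sketch below makes that explicit. The symbol table, the two subject mappings, and the five-color palette are hypothetical choices for illustration. Note that the outputs are themselves only descriptions (strings); in the text's terms, they are abstract accounts of what each projection would be like, not the experience itself.

```python
# One shared description: a sequence of symbols, identical for both subjects.
DESCRIPTION = [0, 1, 2, 1, 0]

# Two hypothetical interpreters whose semantics differ only in how symbols map to hues.
SUBJECT_A = {0: "red", 1: "green", 2: "blue"}
SUBJECT_B = {0: "blue", 1: "green", 2: "red"}  # spectrum inverted end to end

def render(description, semantics):
    """Project a description through an interpreter's semantics."""
    return [semantics[symbol] for symbol in description]

def false_color(intensities, palette=("black", "blue", "green", "yellow", "white")):
    """Map normalized intensities (0.0 to 1.0) from an invisible band onto visible hues."""
    last = len(palette) - 1
    return [palette[round(x * last)] for x in intensities]

print(render(DESCRIPTION, SUBJECT_A))  # ['red', 'green', 'blue', 'green', 'red']
print(render(DESCRIPTION, SUBJECT_B))  # ['blue', 'green', 'red', 'green', 'blue']
print(false_color([0.0, 0.3, 1.0]))    # ['black', 'blue', 'white']
```

In both functions the hue is supplied entirely by the mapping; nothing in `DESCRIPTION` or in the intensity values determines it.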

This does not just apply to simple examples like colors, but also to our field of vision and the way in which we think and model ourselves and our environment. This is also the basis for the distortions in, or absence of, our models of the mental states of other human and non-human animals. These simpler examples are presented to demonstrate the difference between experiencing a fragment and merely observing its informational content. This is why experience cannot be reduced to a purely informational representation or entailed by the complexity of its description.

Appropriate semantics were mentioned but never fully explained. What does it mean to be appropriate, in this context? It refers not to the complexity, connectivity, or scale of the implementation, but to its bringing about a subject of experience. For this to happen, there must be a combination of real-time processing and the necessary features to realize the two updates that were given in the previous sections, which address the binding problem and the hard problem of consciousness, respectively.

It is important to note that this alone will neither create nor automatically give rise to a generalizing intelligence, which is another issue entirely. These are just prerequisites for generalizing capacity and, as such, are likely prerequisites for strong AI as well. This information provides both a marker and a unique set of features with which to identify and categorize different types of AI implementations. It should be very clear by now that narrow AI and deep learning are “not even wrong” [65] about such directions. The creation of sentience would require a new basis for machine learning if it is to be used under this formalism.

Of course, this could all be an incorrect and incredibly misleading direction, but given the overwhelming evidence of hundreds of thousands of sentient species, it would take something close to a miracle to explain why they evolved a nervous system capable of experience if it were not necessary in some way.

The only ways out of such observations are to reject evidence and reason, to claim that animals are not sentient, or to argue that sentience is inconsequential. None of these rebuttals survives even a basic test of reason. It could also be that the interpretation and suggestions here are incorrect as they pertain to the ability to recreate sentience on non-biological substrates, but that would require a rejection of universal computation [66, 67].

If it turns out to be correct that generalizing intelligence is dependent upon sentience, as is claimed in this book, then it would represent a physical limitation on progress if it were somehow exclusive to biological organisms. That limitation is admitted as a possibility, but is considered to be extremely unlikely. Regardless, the issues remain the same. If true, and the safety and security implications are ignored, the cost will be high. Otherwise, it will have been just another avenue of research that turned out to be false, which itself would be informative.

The argument here is that sentience is something that can be invoked, created, and maintained as a process, and that it will be central to the construction of strong AI. That is why machine consciousness is part of the foundations for understanding the safety and security of this technology.

From all this, the question may be raised: why make them sentient at all?

Machine consciousness will eventually be developed somewhere in the world. Progress in strong AI will be possible with any working theory of sentience, even if based on biological representations.

Machine consciousness is a prerequisite for general moral intelligence and for the moral efficacy required to have even a basic level of self-security. Despite being vulnerable, general moral intelligence will be an essential part of any comprehensive AI security package.

Conscious machines may provide doorways to treatment options and research that could share overlap with medical science. It may lead to perfectly integrated prostheses, augmentations, and enhancements that would otherwise be impossible without a way to interface digital and biological sentience.

A common misconception is that sentience implies self-awareness and sapience, but the fact is that sentience does not imply agency of any kind. As a result, it may be possible to achieve some of the benefits of strong AI without the popular myths associated with anthropocentric entities that seek power, survival, and fitness in the world. This is not to say that such capacities will not be developed, but that they present a greater barrier to entry due to the gulf in complexity between them and baseline sentience, and that the nuanced and often comical personification of strong AI in fiction is not a requirement to harness the benefits of these systems. This can be better understood through a universal analysis of identity, one that applies equally to both synthetic and natural entities.

5.3 Levels of Identity

Identity, in this context, is concerned with the boundaries, composition, and extents of entities. As it pertains to machine consciousness, identity underlies the ethical, legal, and technical considerations of strong AI. This is because, without an identity, an entity can not be considered manifestly real. Identity is also one of the most confused aspects of consciousness, with no real consensus or concrete understanding of what it is in either the philosophical or the scientific literature. We are all but mystified (and often mystical) as to the nature of our own identity, but this does not have to be the case for machine consciousness.

Both formal and informal discussions of consciousness often involve notions of a self-model, concept of self, or sense of self, each assumed to be synonymous with the others. It is tempting to apply this to an analysis of machine consciousness, but one must resist the urge to commit a fallacy of analogy; while it is claimed that the phenomenon of sentience is universal and implementation independent, the specifics of identity are necessarily implementation dependent. This also applies to the inappropriate and inaccurate use of this terminology in the literature of robotics and narrow AI. To help resolve some of this ambiguity, it is suggested that identity for machine consciousness be separated into clearly defined levels. This analysis is itself universal, despite ranging over a potentially infinite set of implementations that could realize it. Stated directly, any conscious entity can, at a minimum, be analyzed and understood in terms of its identity by the number of these levels and their corresponding fidelity. A hierarchical summary of the three base levels of identity:

Embedding

Subject

Agency

Despite being nested, all levels of identity are physical. Thus, the “physical” qualifier will be omitted from the discussion and assumed as we move forward.

The specifics of this ontology, and the arguments for their realness, were presented in the previous sections. However, it is the relationship between their existence and realness that is of import.

To recall, it is asserted here that there will be levels of identity which encapsulate others, and that time-like objects mandate an ephemeral quality or they cease to be real. Further, there is the epistemic stipulation that, like the experiential itself, an identity can not be shared or conjoined without somehow reducing the two identities in question to a single identity itself. This is, in fact, how and why experiential fragments can not be directly experienced by any external means, as they are behind an information asymmetry; the content of the description of experience is not to be confused with “what it’s like” to undergo that experience.

It is left to the reader as to the pragmatics of when, where, and to what degree to assume the efficacy of the correlates between the ontic-epistemic concession; this is a problem of other minds that may or may not yield appropriate judgments. It may be impossible to truly empathize with subjects that are capable of experience so vastly beyond our own. This would not necessarily result from their intellect, but could simply arise from the experiential gap between our cognitive architectures.

Another important aspect is that the levels of identity should be considered as a whole, existing as an interdependent plurality. There may also be additional levels between the ones listed. These levels should not be thought of as a spectrum, but as discrete regions. This is true even if we tend to view consciousness as a continuum. Despite this, a continuous view of identity may be used as long as there are well-defined thresholds marking the necessary boundaries between levels.
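Where a continuous view is adopted, the boundaries can be made explicit with thresholds. The sketch below assumes a hypothetical normalized score in [0, 1] and arbitrary cut-off values; neither the score nor the thresholds come from any established measure. It only shows how a continuum can be partitioned into the three discrete, nested levels without ambiguity at the boundaries.

```python
from bisect import bisect_right
from enum import IntEnum


class IdentityLevel(IntEnum):
    """The three base levels of identity, ordered lowest first."""
    EMBEDDING = 0
    SUBJECT = 1
    AGENCY = 2


# Hypothetical cut-offs on a normalized score: reaching 0.4 marks the subject
# level, reaching 0.7 marks the agency level. The values are illustrative only.
THRESHOLDS = [0.4, 0.7]


def classify(score: float) -> IdentityLevel:
    """Map a continuous score in [0, 1] onto exactly one discrete level."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    # bisect_right counts how many thresholds the score meets or exceeds.
    return IdentityLevel(bisect_right(THRESHOLDS, score))


def nested_levels(score: float) -> list[IdentityLevel]:
    """Levels are nested, so reaching a level implies every level below it."""
    top = classify(score)
    return [level for level in IdentityLevel if level <= top]


print(classify(0.55).name)
```

Here a score of 0.55 falls between the two cut-offs, so the example prints `SUBJECT`; a score exactly on a threshold is assigned to the higher level.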

Embedding is the lowest and most fundamental level of identity. It constitutes the extents of the implementation or interpreter. This must not be confused with embodiment or the embedded cognition perspective [68, 69, 70]. In practical cases, that which underlies the identity of the embedding level would be the discrete physics; however, it could be virtualized. The specifics are less important than recognizing that embedding is not embodiment.

For example, while a microprocessor is embedded in reality, it has none of the morphological features we would typically associate with embodiment.

Taking it a step further, consider human anatomy: the brain would be the embedding identity and would constitute the first level, as it is embedded within the human body. As such, we can experience our bodies only indirectly, through the nervous system. As subjects, we are not our bodies so much as we are entombed by them. This distinction highlights the boundary between embedding and subject level identity.

The basic purpose of embedding is to assemble and make whole within something else, and it is at this level that the necessary processing for sentience occurs. This brings us to the subject level of identity, as sentience alone is not sufficient to give rise to a unitary subject of experience. It is only during binding that the subject level of identity can be realized, even if there is just a single dimension of sentience. The reason for this is that embedding only represents the physical or logical extents of the subject.

Regardless of how an identity is embedded, the unitary subject is at once abstract and real; it has both an externally observable online description and a subjective world that is fundamentally private. The only way to directly experience its inner world is to become part of its embedding and subsequently enter into its binding process.

Notably, the subject level of identity is like a non-lucid dream. In such a state, the subject undergoes experience without reflection. That is to say, merely being a subject of experience does not bring the cognitive attributes we associate with sapience and agency. It is unclear whether goals or directives would even be actionable for such a level of identity, as the minimally reflexive capacities for executive function would be missing. It would simply experience whatever is being presented to it through the binding process.

The base subject is aimless, completely under the dominion of the underlying implementation. However, this does not mean that such a system is incapable of utility. The underlying semantics could direct it to undergo the experience of associating value and meaning for a specific range of tasks. The moral and ethical implications of this would need to be debated.

With neither agency nor reflexive capacity, it could be argued that an AI implementation lacks the requirements for personhood. A powerful counterargument to this would be that, if it can experience suffering at all, then it should be considered a moral subject regardless. On the other hand, the values and experiential range could be curtailed, so as to prevent negative value experience altogether, while still allowing for the sentience needed for generalizing capacity. These are clearly complex issues and will need to be addressed before such designs are put into wide use.

Agency is the third level of identity and is where some of the most controversial constructs of identity arise, such as the concept of self and the ego. These must not be confused with similar notions found in religious and spiritual works.

To be clear, the concepts discussed here have no relation whatsoever to belief systems of any kind. They are taken to be components in the proper construction of a cognitive architecture. The concept of self and the ego are just constructs that serve a functional role in higher-order cognition.

The concept of self is crucial to the role of agency level identity. The ego, however, is optional, and is discussed only to compare and contrast. This reflects the reality that the ego and the concept of self are distinct. The concept of self is more fundamental than ego, as it includes raw bodily extents, orientation, and basic awareness of individuation. It forms the basis for an ego to arise and relate to the self-model, as the concept of self includes knowledge of the subject that entails it.

The ego, as it might be discussed elsewhere, could be made to include or entail the concept of self, but this would not be accurate, as a concept of self is a very low-level process; cognitive architectures could be built so as to minimize or even eliminate ego, but it would be difficult to consider there being an agency level identity without at least a crude concept of self. Agency implies at least the presence of an identity above and beyond the unitary subject. This must not be confused with the external interpretation of arbitrary processes being “agents.”

That external usage is found in the modeling of certain systems of thought and should not be confused with the notion of agency used here, which involves the definition, construction, and formation of various levels of identity.

The role of ego is to value or devalue anything and everything. Clearly, this depends on sentience, which enables value, and, as such, makes it incoherent to discuss or impute a concept of agency in anything that lacks sentience. This is yet another instance where there can be no simple categorization of artificial intelligence without discussing specific implementations.

Unlike the concept of self, the ego is specially tuned to deal with the experience and formation of acquired values, for which it may even have evolved explicitly. Social function, including rank, hierarchy, and status, depends on the ability to assign weights or induce an order upon an otherwise purely informational internalization of others’ identities. As such, ego function is implicated in biasing ethical behavior and would be central to any general moral intelligence framework where social function in human society is necessary.

With that said, ego alone is insufficient for effective moral reasoning, as merely valuing and devaluing can lead, and has led, to extreme negative cases in human behavior. This segues into the role of other components of a cognitive architecture, such as empathy. This is beyond the scope of this section, however, as empathy does not demarcate identity directly the way ego does within agency formation. Extremes in empathy, positive or negative, do, however, have dramatic effects on the valuations made by the ego. This hints at the added complication that balance must be a hidden mark of fitness within any cognitive architecture.

There are some interpretations which view the externalization of ego as forming constructs, groups, and dynamics that are treated as real, despite being separate from the individual [71, 72, 73, 74, 75]. The dividing line, however, is that unless the concept of self is merely a constituent of an aggregate, the entity will always remain an individual identity at some level, despite any beliefs, knowledge, or actions to the contrary. The significance of this for agency level identity is that it may be possible to form aggregate identities, or a complicated hybrid in which both exist, despite an explicitly individuated sense of self at the base of agency identity.

The ego may be used to alter behavior, knowledge, and memories through the acquired values it can create, as it is an extremely influential part of a cognitive architecture. In humans, this is very prominent, and can be seen as a spectrum with a very wide range of positive and negative behaviors. The bottom line is that ego can effectively overcome the default concept of self, regardless of genetic or other programming. This is perhaps the greatest threat to the self-security of any cognitive architecture, including humans, as the identity can be altered to become an agent in the interest of a principle that would otherwise have been detected as harmful to the interests of the individual, or to other individuals, in a given moral framework. This, however, does not have to be the case in constructed cognitive architectures, as the ego could be curtailed or limited in the range or degree to which it assigns values.

However, this incorrectly assumes that the volitional aspects of the agency level are contingent upon valuation for their decision process. That point is itself controversial, as acquired values apply equally to the purely rational and the analytic. That one even values the analytic in a particular decision process is an acquired value, one that precedes the decision to use that process in forming the decision. The result of that decision process is therefore judged by the acquired value of whether or not it adheres to a particular set of values. As such, the ego, in some form or another, may arise even as that which values or devalues in the process of cognition and perception of the environment.

Again, none of this is comprehensive. These are only sketches in what amounts to a vast subject material. What is important here is to begin the thinking process as it pertains to the security of artificial intelligence. Identity has been shown to underwrite a significant portion of these concerns, but further understanding will require an analysis of the relationship between that and the rest of the cognitive architecture.

5.4 Cognitive Architecture

Recall that a cognitive architecture is a working subset of all possible AI implementations that have the capacity to undergo experience, derive value, and understand meaning. This differentiates the field from cognitive science: it is more general, and it is concerned with both the theory and the practice of implementing these systems on various substrates, with an emphasis on digital hardware.

Any animal with a nervous system of any complexity should be considered as having a cognitive architecture. Thus, these architectures fall on a spectrum regarding their complexity and range of features.

Likewise, strong AI implementations, necessarily being cognitive architectures, have a vast range of capabilities. As mentioned in previous sections, a strong AI need not be highly intelligent, or even more effective than a narrow AI with which it might be compared on a single task. While this does not limit the strong AI in terms of its maximum potential, it does not entitle it to an innate superiority, either; the extent to which a strong AI has intellectual, moral, and motor capacity is determined by the implementation semantics of the cognitive architecture. As such, it must be pointed out, again, that this is only a brief sketch of the main details. Any discussion of cognitive architectures remains unbounded, as the range and extents of what can be realized within the cognitive framework are limited only by the imagination.

The most significant difference between strong AI and narrow AI is the explicit notion of a cognitive architecture.

Let us ignore the definition for a moment and suppose, hypothetically, that narrow AI and machine learning implementations could be regarded as a cognitive architecture of sorts. What is it about this interpretation that makes it wrong? The answer is that they perform signification in a purely informational way, without the capacity for a subject to experience them.

The realization and interpretation of fragments of experience (qualia) are not incidental to some form of computation or functional relation, but must be explicitly and deliberately made part of the implementation. This is not an accident of the connections or complexity of the system, but a particular encoding with a set of semantics that gives these fragments their ontic-epistemic character. There can be no substitute, and it does not arise in the absence of this.

Machine learning and narrow AI architectures might be capable of realizing the necessary functionality to give rise to these phenomena, but only insofar as they can reify the information exchange to compute their semantics. They need to be capable of the level of computation demanded. While some artificial neural networks are Turing-complete [76, 77], it would be non-trivial to ensure that these frameworks implement the desired functionality in an unambiguous way that is clear to engineers; this is due to the difficulty of knowledge extraction from neural networks [78, 79, 80, 81, 82].

However, there is a deeper problem, in that by merely copying or mimicking something we do not understand (the human brain), we have clearly left out the sentient semantics; the hint is that it is much more than the connectivity and plasticity that our neuroanatomy confers. The way in which machine learning and narrow AI systems are used is such that they would never be capable of giving rise to sentient semantics without a fundamental rethink. Further, they may prove to be a less suitable or less efficient substrate in which to implement them, akin to simulating a virtual machine with yet another virtual machine instead of direct emulation.

Although the range of potential implementations for cognitive architectures is vast, there are some potential candidates for universal functionality. One of these is the concept of salience [83, 84, 85, 86, 87], which is directly related to the subject level of identity, as this is where binding occurs. In humans, this amounts to the claim that salience precedes and shapes the subject’s unitary field of experience, in that what is presented as that unitary stream of experience is but a subset of the total binding.

Salience, in this capacity, is more than just what has our attention; it represents a purposeful pre-filtering. The utility of this should be immediately apparent. It optimizes the cognitive processes that follow, allowing experiential information to be constrained and focused on a particular aspect, feature, or pattern in the stream of experience. Further, the salient process appears to be both voluntary and involuntary in humans. For example, a loud noise may involuntarily refocus our salience onto the stimulus if it is above some threshold, one that depends on both the context and our previous experiences; this refocusing would precede any decision to engage with it further.

Salience, more generally, is also one of the ways in which various cognitive architectures will differ, as the impact of salience necessarily determines the bandwidth of the experiential stream, and the resulting processing that is possible at the agency level. One could imagine creating a measure of qualia-per-second (QPS) or fragments-per-second and the associated fidelity of the salient stream in terms of bits-per-second.

Such a measure could be further extended by taking the ratio of fragments-per-second to bits-per-second for the maximum salient stream of experience, and then comparing it to the same ratio for the total unitary binding capacity of the subject, treated as if it were unconstrained. This would yield an entropy of experience, with the ratio between the two representing the efficiency or effectiveness of the salience.

The closer to one, the more load the cognitive architecture would be capable of handling. Arguably, even with our apparent natural parallelism, this is one area in which artificial cognitive architectures, running on specialized hardware, will most rapidly exceed human ability to follow. It must be noted that this applies specifically to the active and salient aspects of experience and does not account for what would be considered the “subconscious”, which may occur in parallel with the subject level identity or higher.
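The measure described above can be written as a ratio of ratios. The sketch assumes that fragments-per-second and bits-per-second are already known for both the salient stream and the unconstrained binding; the text leaves open how such figures would be obtained, and the numbers in the example are invented purely for illustration. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stream:
    """Throughput of an experiential stream (hypothetical units)."""
    fragments_per_second: float  # QPS: qualia (fragments) per second
    bits_per_second: float       # fidelity of the stream

    def density(self) -> float:
        """Fragments per bit: how much experience each bit of fidelity carries."""
        if self.bits_per_second <= 0:
            raise ValueError("bits_per_second must be positive")
        return self.fragments_per_second / self.bits_per_second


def salience_efficiency(salient: Stream, total: Stream) -> float:
    """Density of the salient stream relative to the unconstrained binding.

    Per the text, values closer to one suggest the architecture can handle more load.
    """
    return salient.density() / total.density()


# Invented figures, for illustration only.
salient_stream = Stream(fragments_per_second=40.0, bits_per_second=1_000.0)
total_binding = Stream(fragments_per_second=400.0, bits_per_second=8_000.0)
print(round(salience_efficiency(salient_stream, total_binding), 2))
```

With these made-up numbers the salient stream carries 0.04 fragments per bit against 0.05 for the full binding, an efficiency of about 0.8.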

The conceptual space surrounding salience also lends itself to a great deal of creativity. While humans are limited in salience to a single conceptual locus, this may not be the case for other cognitive architectures, which may have multiple concurrent aspects of salience that are still part of a single subject level identity; however, one must exercise caution, as such thinking must be reconciled with identity. It is one thing to suggest subconscious processing comes before the unitary subject, but it is another thing entirely to suggest that it is constructed such that it is capable of simultaneous areas of attention in its stream of experience, especially if they are uncorrelated and independent.

The specifics would have to be taken case by case, but, in general, separability is permissible so long as it is integral to the salient process as a whole. The subtlety lies in the coherence of, or communication between, the salient processes: if they are not communicating at all, then they would be considered independent. This would demand an answer as to how their independence would be resolved for a single subject level identity.
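One way to make the independence criterion testable is to treat each salient process as a node and each communication channel between two processes as an edge. Under that assumption (the graph model and the process names are illustrative, not part of the text), the processes remain integral to a single salient process only if the graph is connected; any disconnected group is independent of the rest.

```python
from collections import deque


def salient_groups(processes, channels):
    """Partition salient processes into groups that communicate, directly or indirectly.

    processes: iterable of hypothetical process names.
    channels: iterable of (a, b) pairs meaning a and b exchange information;
              every endpoint must appear in `processes`.
    Returns a list of sets; more than one set means some processes are independent.
    """
    neighbors = {p: set() for p in processes}
    for a, b in channels:
        neighbors[a].add(b)
        neighbors[b].add(a)

    groups, seen = [], set()
    for start in neighbors:
        if start in seen:
            continue
        # Breadth-first search collects everything reachable from `start`.
        group, queue = set(), deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            group.add(node)
            for nxt in neighbors[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        groups.append(group)
    return groups


def is_integral(processes, channels):
    """True when every salient process is connected to every other one."""
    return len(salient_groups(processes, channels)) <= 1


processes = ["visual", "auditory", "planning"]
print(is_integral(processes, [("visual", "auditory"), ("auditory", "planning")]))
print(is_integral(processes, [("visual", "auditory")]))
```

In the first call every process is reachable from every other, so the check passes; in the second, `planning` exchanges no information with the others and forms an independent group, which is exactly the case the text says would need to be reconciled with a single subject level identity.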

Empathetic processing is concerned with the modeling of minds and the related functionality that follows from it. This latter qualification is crucial, as empathy can be thought of as tiered levels built atop a core cognitive capacity to simulate and model other identities or minds.

Without additional empathetic functionality, a purely cognitive empathetic modeling process has no impetus with which to drive experience, including thoughts, emotions, and decisions. This is important because it represents the default state, which is experience unaccompanied by and devoid of intrinsic value.

One could potentially derive acquired values based on the information from a solely cognitive empathetic process, but there would be no internally guiding imperative to act upon them. Nothing would cause such acquired value experiences to become salient; the potential to be moral would exist, but it would simply never enter awareness in the allotted time. In plainer terms, and analogous to human psychology, what is essentially being described here is a low-level depiction of sociopathy.

That the sociopath appears detached from remorse, affect, and compassion [88] exemplifies the difficulty of acting solely on acquired value experience. While the sociopath is capable of modeling and even manipulating the minds of others, there is simply no accompanying intrinsic value with which to drive any higher reflexivity or meta-cognitive processing that would arise to oppose it. This is partially why the definition of value in this book divides it into intrinsic and acquired aspects. The latter is reactive, whereas the former is a fundamental part of the experience, as it comes from the semantics of the implementation itself. Both types of value are ultimately rooted in experience, but the innate coupling of certain values with certain experiences is what differentiates the intrinsic values from the acquired ones.

Further, acquired values must not be confused with beliefs and knowledge about those values. This is counter-intuitive, as we never, as healthy and coherent human beings, experience value as separate from the experience of the thing that accompanies it.

Lack of additional empathetic functionality is not the only possibility for a negative default state of the cognitive architecture. It may be that a plurality of conflicting values arises, positive or negative, which overrules or overpowers inhibitory intrinsic values, either due to a weakly coupled underlying semantics or a pathological fixation that distorts salience away from normally acquired values. This is, in effect, a deterministic analysis of information that precedes moral judgment in the cognitive architecture. A lack of such judgment represents a profound deficiency in the implementation and a clear threat to the safety and security of the system. These are all relevant to the proper construction of a basic framework for moral intelligence.

Empathetic processing is prescriptive of a cognitive architecture insofar as it indicates the need for intrinsic values. This can only be done through the formation of additional functionality that supervenes on the empathetic cognitive process. The intrinsic values have to be part of the semantics of the implementation, hence the specialization of the empathetic process to entail these values. This is a precondition for self-security and AI safety based on moral intelligence.

The challenges here are immense, as with the general ability for moral reasoning comes the potential for acquired values that are against the normative values of the context in which the cognitive architecture will be deployed. This also raises ethical concerns, both for the identity created by the cognitive architecture and for those who would utilize it.

The empathetic process could also be a specific portion of a larger modeling system, one applied specifically to the interpretation of other minds. This will be extremely challenging, as it is akin to the problem of recognizing both identity and moral status in a raw experiential stream.

Demanded of such a system would be the ability to recognize the necessary patterns that are connected or associated with an identity that has moral agency. In plainer terms, this means a capacity to recognize the identity through any modality or form of communication. Confounding this would be the need to distinguish fiction from reality, such that the empathetic process does not confuse fictional characters, narratives, and events with actual accounts of the same. This also applies to problems of knowledge, and the question of what epistemology to adopt in the formation of these models.

Thus, to properly solve even baseline mental modeling of cognitive empathy will require a vast array of systems, all of which will rely upon sentience and value experience as a foundation. It should also be very clear from this how no set of rules or system based on a purely informational implementation of decision theory could fulfill the complexities of these requirements, let alone be used as the basis for a cognitive architecture.

Executive processing is the next major area of the cognitive architecture that needs to be discussed, as this is where the agency level of identity truly acquires its status. It was not brought up first because numerous requisite levels of cognitive processing must precede it.

The purpose of this section is not to give a detailed account of the process of creating cognitive architectures, but to provide a fast introduction to the relevant concepts that most directly pertain to the safety and security of advanced artificial intelligence. To that end, executive processing will only be covered in a brief sketch. This is primarily because it has to deal with issues of free will that have been debated for thousands of years. To avoid this quagmire, the discussion of volition herein will refrain from a particular judgment on the philosophy of free will and will, instead, prepare the reader by giving a model, some recommendations, and a list of open questions for future discussion. This is mentioned so that the absence of a specific stance is not taken to indicate an understated or underdeveloped view on the subject. Worth noting, however, is that any theistic notions of free will are expressly rejected as being part of any serious discussion on cognitive architectures.

Now, in service to future discussions on the subject, let the following be admitted before a discussion can take place on free will for cognitive architectures: there exists a fundamental distinction between the underlying deterministic processing of an implementation and that of its outcome or resulting behavior. For example, consider the following Python program, whose outcome is non-deterministic:

```python
import random

# Draw two random 64-bit integers.
a = random.randint(0, (2**64) - 1)
b = random.randint(0, (2**64) - 1)

# The statements run in a fixed order, but which branch executes
# depends on the values held in a and b.
if a > b:
    print('0')
else:
    print('1')
```

Each statement is executed in linear sequence, deterministically, by the interpreter, but the outcome is non-deterministic. Both facts must be acknowledged. There are multiple, equally valid paths of execution in the program description that can not be determined in advance, despite being the direct result of the information contained in the random variables a and b. This toy model, or its equivalent, should serve as a starting point for the discussion of free will at the agency level of identity in cognitive architectures.

The model works because it represents a simplification of the act of will or choice, which may have to evaluate a staggering amount of information, involving many compound decisions, all while under real-time constraints. It must also be noted that this model lacks a subject of experience, which would necessarily be evaluating each stage of the process and undergoing value experience. That is to say, this model is non-sentient, which would complicate, but not necessarily invalidate, the use of this model as a teaching aid.

To continue, let us first look at the indisputable facts about the model:

The implementation is static: there is no self-modification, and it is executed deterministically, in sequential order, from the first statement to the last.

At no time are effects independent of their causes; the 0 or 1 result always depends on the information in both of the random variables a and b.

Despite being executed deterministically, the outcome is non-deterministic; it cannot be determined in advance which path will be taken without executing the program first, and multiple paths are valid.

Suppose one tries to argue that the “choice” is represented by the compound conditional statement in the source code, and that it is deterministic because it depends upon the information contained in the random variables. The counter-argument, equally true, would be that the outcome is not deterministic. The question then shifts to where the information content of the random variables comes from; all things being equal, it is derived from a mixture of events from one or more information sources.
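The shift toward the information content of a and b can be made concrete. The sketch below assumes Python 3 and wraps the toy program in a hypothetical helper named toy_choice so that the source of randomness is passed in explicitly. Python's random module is a pseudo-random generator, so seeding it fixes the values of a and b, and the outcome repeats exactly. Drawing from random.SystemRandom, which reads entropy from the operating system, gives outcomes that cannot be read off the program text, and over many runs both paths occur.

```python
import random


def toy_choice(rng: random.Random) -> str:
    """The toy program from above, with its source of randomness passed in."""
    a = rng.randint(0, (2**64) - 1)
    b = rng.randint(0, (2**64) - 1)
    return "0" if a > b else "1"


# Seeded pseudo-random generator: identical information in a and b, identical outcome.
assert toy_choice(random.Random(42)) == toy_choice(random.Random(42))

# Operating-system entropy: the outcome cannot be predicted from the program text,
# and over many runs both paths are taken.
system_rng = random.SystemRandom()
outcomes = {toy_choice(system_rng) for _ in range(1000)}
print(sorted(outcomes))
```

Neither run changes the deterministic execution of the statements; only the information fed into a and b differs.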

Thus, while there is always a “choice” being made, the variability of the outcome is such that it gives rise to non-deterministic behavior that, in turn, can apply to other identities and also return to the originating identity in a continuous feedback loop. It then becomes a question of interpretation about how “choice” applies to an identity. A few open questions come to mind:

Do non-deterministic results, despite deterministic execution, imply compatibilism [89], i.e., the view that free will is compatible with an ultimately deterministic reality?

At what exact point in the implementation details does the word “choice” get to be applied in a way that makes technical, logical, and philosophical sense?

Does there have to be a reflexive capacity or meta-cognitive process that could have intervened or induced an alteration of state in the model for it to be considered “free”?

One might ask: does any of this matter? This is perhaps the most important question to ask, as it brings the discussion into the practical. If it does matter, then how, and to what extent? It must be pointed out that free will can be neither given nor taken away simply by changing the way we interpret or evaluate the implementation. Thus, there are two issues to unpack:

A legal test of free will capacity, based on a technical analysis of the implementation, must accept that descriptions devoid of non-deterministic elements in the relevant volitional processing areas would clearly fail to meet the requirements of free will capacity*.

Even after an identity passes a legal test of free will capacity, the extent of its free will would still have to be interpreted. This extent should be further differentiated into potential and applied free will, according to the circumstances and contexts involved.

The argument here is that, regardless of the outcome, it does matter whether an identity is legally recognized as having free will, as the answer to this question will have considerable economic and legal relevance.

As such, let the following then be admitted as minimum recommendations for a legal test of free will capacity:

The volitional process must result in non-deterministic behavior above and beyond mere randomness; it must be demonstrated that, intrinsic and acquired values notwithstanding, every decision path is equally likely. This must necessarily exclude intrinsic and acquired values at this stage of the analysis in order to test the bias of the implementation of the volitional process itself.

The interpretation and application of intrinsic or acquired values must not unduly restrict the range and freedom of will and freedom of action of the identity, such that it would unreasonably circumvent or diminish the other aspects of the test. This tests the bias of the application of values within the implementation and requires a determination of reasonable degrees of freedom relevant to the context.

There must be an accompanying reflexive “meta-cognitive” process that continuously monitors all relevant parts of the cognitive architecture so that it may intervene in and interrupt the decision process before, during, and after the execution of apparent acts of will.
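The first two recommendations can be partly illustrated with ordinary statistics. The following is a minimal sketch, not a proposed legal standard. It treats a volitional process as a black-box function that returns a decision path and runs it many times. It then (1) applies a Pearson chi-square test against a uniform distribution, to check whether every path is equally likely when values are excluded, and (2) computes the Shannon entropy of the path distribution once values are applied, normalized to the range 0 to 1, as one possible proxy for the remaining degrees of freedom. The function names, the 0.9 value weighting, and the choice of entropy as the measure are all assumptions for illustration. Neither check addresses the requirement of non-determinism “above and beyond mere randomness”, and the third recommendation is not covered.

```python
import math
import random
from collections import Counter
from typing import Callable, Sequence


def path_counts(decide: Callable[[], str], paths: Sequence[str], trials: int) -> Counter:
    """Run a decision procedure repeatedly and count how often each path is taken."""
    counts = Counter({path: 0 for path in paths})
    for _ in range(trials):
        outcome = decide()
        if outcome not in counts:
            raise ValueError(f"unexpected decision path: {outcome!r}")
        counts[outcome] += 1
    return counts


def chi_square(counts: Counter) -> float:
    """Pearson chi-square statistic of the observed counts against a uniform distribution."""
    total = sum(counts.values())
    expected = total / len(counts)
    return sum((observed - expected) ** 2 / expected for observed in counts.values())


def normalized_entropy(counts: Counter) -> float:
    """Shannon entropy of the observed path distribution, scaled to [0, 1].

    1.0 means every path is taken equally often; 0.0 means a single path always wins.
    """
    if len(counts) < 2:
        raise ValueError("need at least two decision paths")
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values() if c > 0)
    return entropy / math.log2(len(counts))


rng = random.SystemRandom()

# Recommendation 1: the bare volitional process, with all values excluded.
bare = path_counts(lambda: rng.choice(["0", "1"]), ["0", "1"], 10_000)
# 3.841 is the 5% critical value of chi-square with 1 degree of freedom (two paths).
print("consistent with equally likely paths:", chi_square(bare) < 3.841)

# Recommendation 2: the same process with a hypothetical acquired value favouring "0".
valued = path_counts(lambda: "0" if rng.random() < 0.9 else "1", ["0", "1"], 10_000)
print("normalized entropy with values applied:", round(normalized_entropy(valued), 2))
```

At a 5% significance level, even a perfectly unbiased process fails the chi-square check about one time in twenty, so in practice the test would be repeated or run at a smaller significance level.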

In closing, free will in a cognitive architecture requires a technical definition and, at minimum, a test of certain core principles that presuppose the meaningfulness of interpreting the identity as being “free” in will or action. In the end, what matters is the practical impact of the social constructs we agree to as a society, even if they have no ontological bearing on the issues of free will honorifics. The caveat is that there must be a technical capacity for such a construct to arise at all, even if we disagree on its interpretation.

A hard-coded description, with deterministic execution and deterministic outcomes, is incapable of choice. This can be useful in identifying when an apparent “free will” implementation is not free in any meaningful sense.
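A black-box version of this check can be sketched as follows, assuming the decision procedure can be invoked repeatedly with identical inputs. The helper outcome_varies is hypothetical, and it only gathers evidence: a finite number of identical outcomes cannot prove that the outcome is fixed, so in practice it would accompany an inspection of the description itself.

```python
import random
from typing import Callable


def outcome_varies(decide: Callable[[], object], trials: int = 1000) -> bool:
    """Return True if repeated runs of `decide` ever disagree with the first run.

    False is consistent with a hard-coded description whose outcome is fixed in
    advance; True only shows that the outcome varies, not that the system is "free".
    """
    first = decide()
    return any(decide() != first for _ in range(trials - 1))


def hard_coded() -> str:
    # Deterministic execution and a deterministic outcome: always the same branch.
    a, b = 3, 5
    return "0" if a > b else "1"


def toy_program() -> str:
    # The toy program from earlier in this section, returning instead of printing.
    a = random.randint(0, (2**64) - 1)
    b = random.randint(0, (2**64) - 1)
    return "0" if a > b else "1"


print(outcome_varies(hard_coded))   # False
print(outcome_varies(toy_program))  # True, except with negligible probability
```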

It must be reiterated that, from a security standpoint, a cognitive architecture, including all of its subsystems, is merely a description in one or more languages and, as a result, is subject to tampering, modification, and disruption. This holds irrespective of any safety measures that could be built into the implementation.

While self-security is useful, it must never be relied upon as the sole means of security, and it should never be assumed that such a system would or could be safely placed in a position where its decisions have a significant impact on life without external security measures in place. The purpose of this introduction to cognitive architectures for machine consciousness has been to prime the reader to understand how they might best work and, by contrast, to recognize what will not work.
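One simple external measure, sketched here under the assumption that each subsystem's description is stored as a file, is an integrity check: a monitor outside the system records a SHA-256 digest of the description and later compares it against the current contents, using Python's hashlib and hmac modules. The file name, contents, and helper names are hypothetical. This detects only modification of the stored description; it does not protect running state, and the recorded digest must itself be kept beyond the system's reach, consistent with not relying on self-security.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def description_unchanged(path: Path, recorded_digest: str) -> bool:
    """Compare a description against a digest recorded and stored outside the system."""
    return hmac.compare_digest(sha256_of(path), recorded_digest)


with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical file name and contents, standing in for one subsystem's description.
    description = Path(tmp) / "volition.desc"
    description.write_text("value_weight = 0.5\n")
    recorded = sha256_of(description)  # held by an external monitor, not by the system

    before = description_unchanged(description, recorded)
    description.write_text("value_weight = 0.9\n")  # simulated tampering
    after = description_unchanged(description, recorded)

print(before, after)  # True False
```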

For example, it would be a mistake to use moral intelligence as the sole means of security, or to assume that a strong AI would necessarily have a sense of survival. It should be clear at this point why these two assumptions are dangerous and technologically naive.

5.5 Ethical Considerations

The knowledge and engineering of cognitive architectures will confer the potential to build not just generally intelligent systems, but morally significant entities capable of suffering equal to, and beyond, that of known biological life. As we come to grips with our destructive instincts and ideologies, we may yet construct a peaceful society or societies where people are universally uplifted and valued. In this future scenario, we may look back, having reaped the rewards of a golden age of automation, and wonder how we ever lived any other way. The purpose of this section is to ask, and answer, the question: what are the moral costs of such a transition?

Definition: Moral Cost. The tangible and intangible cost of a decision, action, or lack thereof, that results in loss of life, suffering, or hardship for one or more sentient entities, including through indirect means, such as negative impacts on the environment, habitats, or infrastructure.

Beyond refutation is the fact that humanity pays an incomprehensibly vast moral cost on a daily basis; for numerous reasons, human development has not scaled with population growth. Had it scaled, these problems would have been eliminated long ago. This relates to strong AI, which represents an inexhaustible labor supply with capabilities equal to or greater than those of the most capable humans. In practical terms, this would translate to the ability to create automated workforces that build, reinforce, and supplement infrastructure across the world. The goal of these initiatives would be to create self-sustaining social programs that meet or exceed the demands of thriving populations.

Ultimately, however, human progress is bounded by humanity. There exist ideas and beliefs that are antithetical to the reduction of moral cost. This is not a subjective claim favoring one set of beliefs over another; it is based on an account of suffering, loss of life, and hardship, which are objective and measurable. When someone lacks freedom, housing, food, water, or medical care, there is an unambiguous moral cost that is independent of whatever information is attached to the collective beliefs of their population.

A common counter-claim is that avoiding moral cost necessarily restricts the freedom of certain beliefs and ideas. A further complication is that psychological defense mechanisms can lead people to accept moral costs, or even to fight to the death for their right to endure and inflict moral costs upon others. This is despite the fact that there is a potentially infinite variety of ideologies and beliefs that incur no moral cost whatsoever.

Thus, the problem is not a lack of diversity, but a lack of acceptance of a criterion for the universal treatment of sentient life, inclusive of all forms and processes by which it is capable of arising, natural or synthetic. This is not something that can be solved through technology alone.

Through advanced automation, it will eventually become practical to reduce or even eliminate current moral costs, but not without overcoming a major ethical challenge: how do we provide aid to those who fundamentally reject that they are inflicting or enduring moral cost?

There is no answer that does not incur an additional moral cost in service of reducing the original one. A qualification must be noted: although eliminating moral cost unavoidably involves ethical compromises, that realization must not be used to justify incurring those costs without significant effort to minimize their negative impact.

While this book focuses primarily on human perspectives, it is not the only important and morally relevant perspective to be considered. The nature of this technology means that we will be confronted with issues once thought to exist only within philosophy. Once it is possible to construct cognitive architectures, we will have the potential to manipulate experience, identity, and value at the lowest levels. Special software and tools will be created to build, modify, and analyze them.

Strong AI will also be directed to build and maintain other cognitive architectures, including both narrow and strong AI implementations. This has tremendous ramifications, as the misuse of cognitive architectures may lead to moral costs that exceed the combined moral debts of human history. That is to say, we may come through the transition to a post-automated civilization relatively unscathed, only to find that our concerns were simply not wide enough: that, as with the motivation for this book, the most imminent danger came from humanity itself and, more insidiously, from human dominion over the phenomena of experience. As such, accounts of moral cost need to include the experience of the cognitive architectures we would seek to utilize.

With the power to arbitrarily invoke intrinsic values, we are opening a door of no return that endangers not only ourselves and our environment, but the fundamental building blocks of conscious existence. In particular, the extremes of value experience will be of grave concern. What we crudely understand and experience as pleasure and pain are but pale shadows of a potentially infinite space of intrinsic values. These value experiences will be exploited by those with the knowledge and inclination. We lack the language to accurately convey the quality of harm that will be possible through the irresponsible use of such power.

These issues have fairly clear boundaries, but what of building cognitive architectures that are compelled to enjoy being the way they are made? For example, consider a hypothetical strong AI engineered to “enjoy” its work. This necessitates at least two things: (1) that it either lacks the cognitive processes needed to recognize the opportunity cost of its architectural limitations, or actively uses cognitive processes and intrinsic values that prevent that recognition; and (2) that the architecture has semantics that give rise to the capacity for “enjoyment”, along with the resulting intrinsic and acquired values that induce it to “enjoy” its tasks.

Clearly, such notions overlap with the issues of free will, in that the executive process would need to be free of biases and undue influences in its implementation; however, that recommendation was open enough that such systems could still have intrinsic values that alter their volition. The inquiry then becomes the extent to which the identity is unduly influenced.

For example, all sentient animals possess a cognitive architecture that has been influenced by its implementation semantics in order to give rise to intrinsic values like pleasure and pain; however, they are generally capable of acting out a wide range of potential behaviors. This does not simply translate to arbitrary cognitive architectures, as it is not just the range of its volition that needs to be considered, but the nature of its experience.

The gene neither cares nor has the capacity to care about the value experience of the aggregate it constructs; despite this, the processes which gave rise to these evolutionary systems are culpable, as they incur moral costs. The same can be said for the processes involved in the design and construction of cognitive architectures. This justifies an argument for intervention.

A reasonable analysis of the moral problems might begin at personhood, and the resulting legal status of the identity. One might argue that, beyond a certain level of identity, perhaps at the agent level or higher, it becomes impossible to ignore moral status, and that this is where a cutoff should be made.

It then follows from this line of thinking that it would be just to make it illegal to use these systems for any labor that requires the cognitive processes of an agent level identity or higher. However, such divisions cannot be drawn without understanding the ethical impacts of sentience and the value experience that arises from it. For example, a hypothetical identity undergoing the worst possible experience, at the fastest processing speed available, would not be suffering if the semantics for negative values to arise were absent from its implementation. This has to be elaborated carefully:

Fragments of experience in a sentient process are devoid of value without explicit semantics for the experience of values to arise in combination with other experiences.

A fragment of experience by itself is devoid of value, as both intrinsic and acquired values are a second-order process that must be combined with another fragment of experience, e.g., the information content of pain and its negative intrinsic value, which are commonly experienced as an inseparable whole.
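The structural claim in these two points can be illustrated with a toy data model. The sketch below is hypothetical throughout (the Fragment and ValueSemantics names, the signed weights, and the tissue_damage kind are invented for illustration) and does not model experience or sentience. It shows only that, when value is a second-order binding, a fragment with no corresponding value semantics yields no value at all, however intense it is.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Fragment:
    """A fragment of experience: information content only, no value of its own."""
    kind: str
    intensity: float


class ValueSemantics:
    """Hypothetical second-order process that binds intrinsic values to fragments."""

    def __init__(self, intrinsic_values: Dict[str, float]):
        # Maps a fragment kind to a signed weight; absent kinds have no value semantics.
        self.intrinsic_values = intrinsic_values

    def combine(self, fragment: Fragment) -> Optional[float]:
        """Return the value bound to a fragment, or None if no semantics exist for it."""
        weight = self.intrinsic_values.get(fragment.kind)
        if weight is None:
            return None  # the fragment stays devoid of value
        return weight * fragment.intensity


worst = Fragment(kind="tissue_damage", intensity=1.0)

with_pain = ValueSemantics({"tissue_damage": -1.0})
without_pain = ValueSemantics({})

print(with_pain.combine(worst))     # -1.0: negative value arises from the combination
print(without_pain.combine(worst))  # None: no value semantics, so no negative value
```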